Google Assistant's 6 new features you need to know about

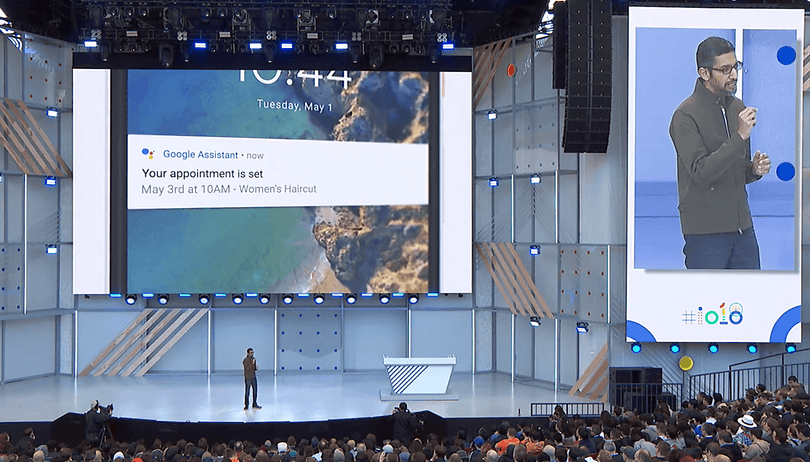

Do you remember when Google introduced us to Google Assistant in 2016? Some time has passed, and it has grown a little since then. During Google I/O 2018, the Mountain View tech giant presented its plans for its prodigy.

Google’s little assistant has managed to win the hearts (or at least find its way into the smartphones) of many users and is now subject to the most fundamental Darwinian principle: adapt or go extinct. So Google has decided to help it evolve. In this article, we’ll go through the main projects that the company unveiled for its assistant at the keynote.


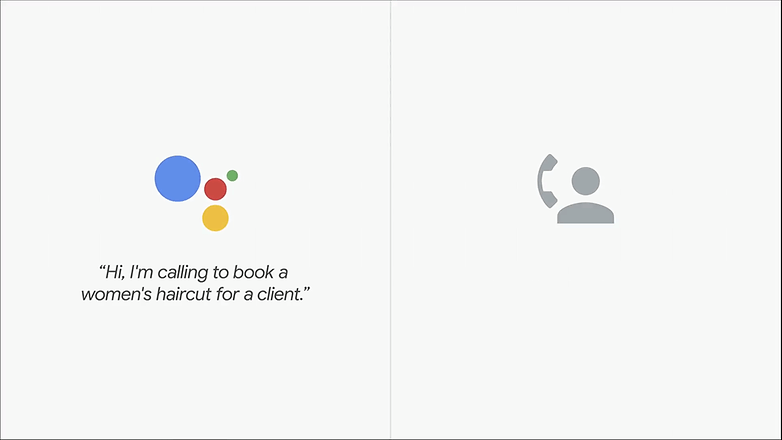

1. Google Assistant is going to learn how to make phone calls

This was a keynote address, so it’s best to take what we’ve learned with a grain of salt. The keynote featured a demonstration: the Assistant called a business and spoke so smoothly that the person who answered seemingly could not tell it was a machine. This feat requires a much more elaborate mastery of artificial intelligence than we knew of before today, although Google stated they “have been working on this technology for many years”.

2. Google Assistant is mutating (with multiple voices)

The brave little voice assistant will be able to change its voice as you please. This is important because voice interactions are Google Assistant’s primary means of communicating with us. Google has stated that the voice must sound “personal and natural”. John Legend will be one of six people to lend their voice in English. Over time, there will probably be more than six voices available.

3. Google Assistant will be taking speech classes

If you’re used to using Google Assistant, you’ve probably noticed that its voice sometimes doesn’t sound very natural (and sometimes it doesn’t understand my accent!), and that’s precisely what Google is trying to improve. Thanks to progress in the field of artificial intelligence, communication will be simpler. It isn’t only a question of understanding or being understood, but also a matter of fluency. Google should, for example, drop the need to repeat the famous (but annoying) ‘OK Google’ before every follow-up command.

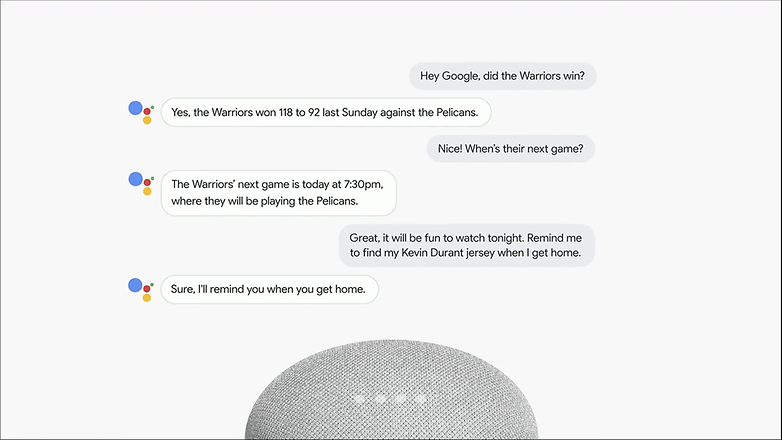

4. Google Assistant will learn multitasking

At the moment, the little assistant can only do one thing at a time. This works, but it isn’t enough, since it can sometimes feel unnatural. Google will therefore teach its assistant to handle two requests at the same time. Personally, I’d prefer that Google Assistant respond better to normal queries before learning to handle multiple ones, but Google is likely trying to kill two birds with one stone here.

5. Google Assistant will get a screen

Although it doesn’t have its own vocal cords, the voice is the most valuable element that Google Assistant has. It will, however, get its own screen on devices. These screens will be known as smart displays. In short, this will allow you to interact with Assistant directly by tapping the device instead of using a voice command.

Assistant will show you a preview of your day on your smartphone and add some suggestions using various Google services. These changes are to be implemented in the United States before the end of the year. Google is also planning to introduce a delivery service in the U.S.

6. Google Assistant will let you interact with it while you’re driving

Google Assistant will let you play music, send/receive messages, or get information directly without even having to leave Google Maps. In case you were wondering: yes, that’s legal. Time will tell how this can affect the concentration of motorists behind the wheel.

Which of these features are you most interested in? Let us know in the comments!

Well, seems fantastic

Woww...that was great news

Buy one of these things after you read about the latest finding.

Researchers in the U.S. and China have discovered ways to send hidden commands to digital assistants—including Apple’s Siri, Amazon’s Alexa, and Google’s Assistant—that could have massive security implications.

In laboratory settings, researchers have been able to activate and issue orders to the systems via means that are undetectable to the human ear, according to a new report in The New York Times. That could, conceivably, allow hackers to use the systems to unlock smart locks, access users’ bank accounts, or access all sorts of personal information.

A new paper out of the University of California, Berkeley said inaudible commands could be embedded into music or spoken text. While at present this is strictly an academic exercise, researchers at the university say it’s foolish to assume hackers won’t discover