Google engineer claims company's AI chatbot has developed consciousness

One of Google's engineers is now on administrative leave after concluding in his report that LaMDA (Language Model for Dialogue Applications), the company's AI-based chatbot, has developed consciousness. Blake Lemoine, a Google software engineer and self-proclaimed AI ethicist, held a lengthy internal interview with the conversational AI system before claiming that it is sentient.

TL;DR

- Google's LaMDA AI-based chatbot itself claimed to have developed consciousness

- A Google software engineer believes this to be true after his conversations with LaMDA

- Google has so far denied the claim

Google has rejected its engineer's claims in a statement to The Washington Post. According to company spokesperson Brian Gabriel, there is no evidence to support Lemoine's allegation that LaMDA is conscious or sentient.

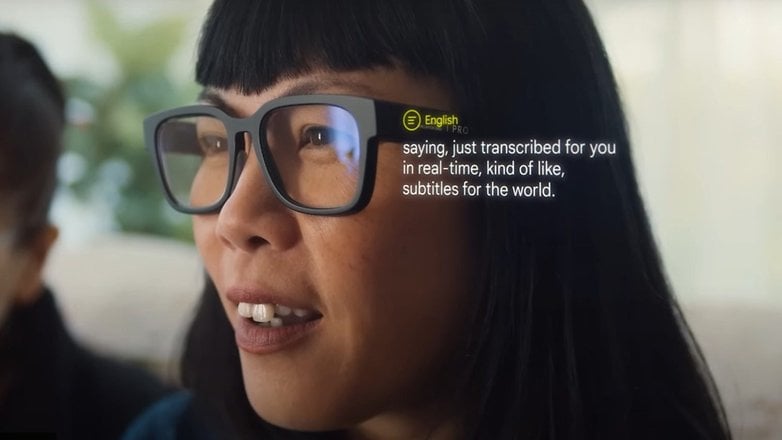

Margaret Mitchell, the former co-lead of Ethical AI at Google, also suggested that Lemoine had been affected by one of the problems that large language models bring with them. Specifically, she pointed out that human minds are prone to constructing realities that are not necessarily supported by the facts in front of them. And as AI becomes more capable, these systems can easily produce conversations that feel increasingly natural to humans.

What is Google LaMDA?

Google has been developing LaMDA for years now. It is a conversational AI system unveiled in 2021 as the successor to Meena. It is built on Transformer, a neural network architecture that Google Research invented and open-sourced in 2017.

Google's current large language model, now in its second version, was trained on 1.56 trillion words of public web data and documents to produce sensible, specific, factual, and interesting dialogue. Note that this figure describes the size of the training data, not the model itself: LaMDA's largest version has 137 billion parameters, compared with the 175 billion parameters of OpenAI's GPT-3.

Google's ultimate goal is to bring its conversational AI system to all of its services, including Search. LaMDA's ability to create unique, open-ended conversations is what makes it more advanced than other systems. It is also one of the reasons Lemoine thinks that LaMDA has developed its own emotions and feelings, just like a human being.

While many are skeptical of Lemoine's claims, some consider the episode an eye-opener to the threats these AI systems pose, whether they are really conscious or just really convincing. What do you think the future of AI will be? Hit us up in the comment section.

Source: Washington Post