48 MP or not? A look at Sony's new camera sensor

A lot has been said in the past few weeks about Sony's new 48 MP sensor (called IMX586), which is used in the recently released Honor View20. As a photography enthusiast, I was intrigued, and I must admit that the more I dug into the issue, the more some of the information did not add up.

Before we start

I want to note that I'm not trying to push for or against certain smartphone manufacturers in this article. I'm not even attacking Sony for its work. I'm sure the company's engineers have valid reasons for every choice made in the design of the sensor. I invite them, in case they are reading this article, to correct me or explain these choices.

Obviously comments and objections from you readers are also welcome, as long as you keep the conversation civil and respectful.

Is the Sony IMX586 really a 48 MP sensor?

In theory, yes; in practice, not so much. Reading the spec sheet published by Sony, you can find the phrase "48 effective megapixels" everywhere. The table shows a resolution of 8000x6000 pixels, which does indeed equal that number. But let's take a closer look at the sensor...

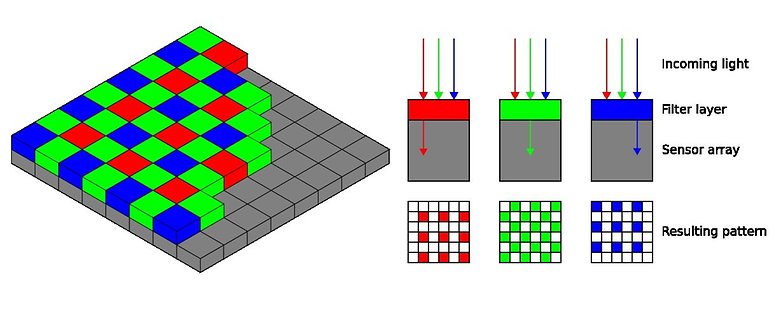

In a sensor that uses a Bayer array pixel arrangement, each individual photoreceptor is physically filtered so that only green, red or blue light wavelengths can pass through (a common practice that mimics the way the human eye operates). The sensor electronics are then responsible for working out the actual color "perceived" at each pixel by borrowing information from its neighbors and using some good old math. In the simplest case, this is done by combining the averaged values of two green pixels (G) with one red pixel (R) and one blue pixel (B).
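To make the "good old math" a little more concrete, here is a minimal sketch of that simplest case: one 2x2 RGGB cell reduced to a single color value. The function name and the sample readings are made up for illustration, and real sensors use far more sophisticated interpolation that produces a color for every pixel, not one per cell.

```python
def bayer_cell_to_rgb(cell):
    """Combine one 2x2 RGGB cell into a single (R, G, B) value.

    `cell` is ((R, G1), (G2, B)): raw brightness readings from the four
    color-filtered photoreceptors. Hypothetical helper, for illustration only.
    """
    (r, g1), (g2, b) = cell
    g = (g1 + g2) / 2  # the two green readings are averaged
    return (r, g, b)


# Made-up readings for one cell
print(bayer_cell_to_rgb(((200, 120), (100, 50))))  # (200, 110.0, 50)
```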

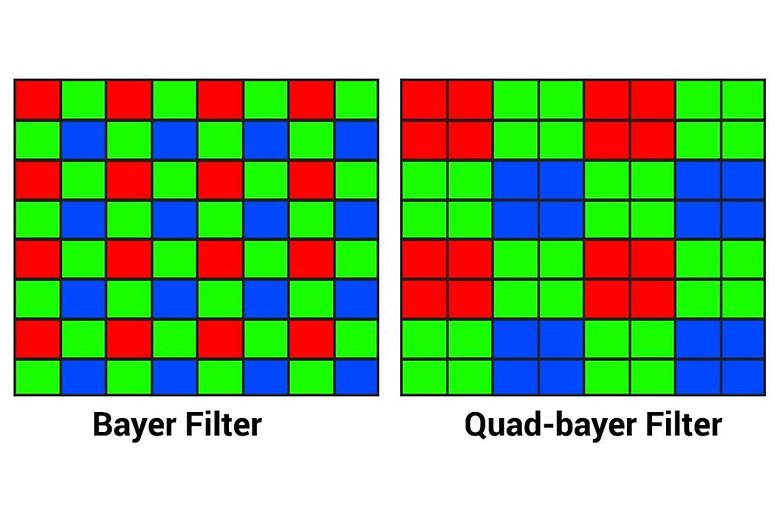

On the IMX586, Sony uses a pixel arrangement called a Quad Bayer array, which is nothing more than a classic Bayer array arrangement (used on virtually every existing smartphone sensor) in which every single pixel has been replaced by four pixels of the same color forming a 2x2 square. This article by our colleagues at GSM Arena explains perfectly how the Quad Bayer matrix works on the Huawei P20 and P20 Pro smartphones.
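The layout is easy to picture if you build it from the classic pattern. The sketch below assumes an RGGB starting tile and simply expands each filter into a 2x2 block of the same color; the names are mine, not Sony's.

```python
# Classic Bayer tile, assumed here to be in RGGB order
BAYER = [["R", "G"],
         ["G", "B"]]


def quad_bayer_tile(bayer=BAYER):
    """Expand each filter of a 2x2 Bayer tile into a 2x2 block of the same color."""
    tile = []
    for row in bayer:
        expanded = [color for color in row for _ in range(2)]  # double each column
        tile.append(expanded)
        tile.append(list(expanded))  # double each row
    return tile


for row in quad_bayer_tile():
    print(" ".join(row))
# R R G G
# R R G G
# G G B B
# G G B B
```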

Now you can understand how grouping 4 pixels of the same color next to each other in a Quad Bayer matrix is almost equivalent to having a Bayer-matrix sensor with pixels twice as large on each side (and four times the area). Knowing this, the Sony IMX586 is closer to the definition of 12 MP than the claimed 48 MP.
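Here is a rough sketch of that grouping, assuming the four same-color readings are simply averaged (whether the real sensor sums or averages them, and at which stage, is not something I can confirm). Both helper names are hypothetical.

```python
def bin_2x2(raw):
    """Average each non-overlapping 2x2 block of a 2D list of readings."""
    height, width = len(raw), len(raw[0])
    return [
        [
            (raw[y][x] + raw[y][x + 1] + raw[y + 1][x] + raw[y + 1][x + 1]) / 4
            for x in range(0, width, 2)
        ]
        for y in range(0, height, 2)
    ]


def binned_megapixels(width, height):
    """Resolution in megapixels after 2x2 grouping."""
    return (width // 2) * (height // 2) / 1_000_000


print(bin_2x2([[1, 3, 10, 10],
               [5, 7, 10, 10]]))      # [[4.0, 10.0]]
print(binned_megapixels(8000, 6000))  # 12.0
```

Halving both dimensions of the 8000x6000 grid gives 4000x3000, which is where the 12 MP figure comes from.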

However, we can't say that the information is wrong, because each individual photoreceptor is separate from the one adjacent to it, so technically there are 48 million ...

So much marketing

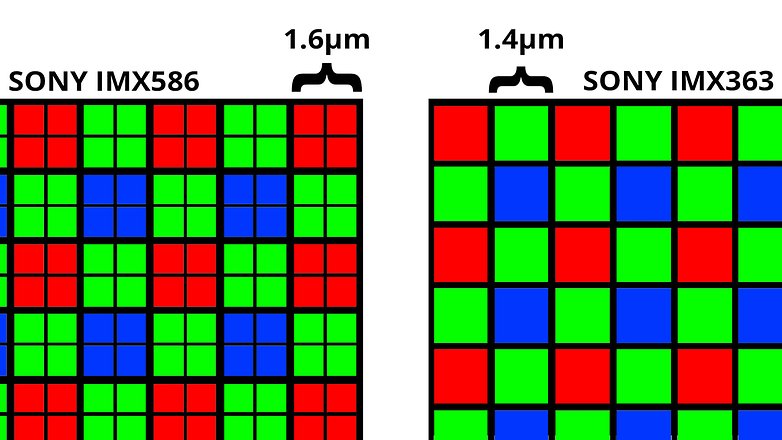

Sony claims that this configuration allows both high sensitivity and high definition for its sensor. I have no objection regarding the sensitivity: by grouping 4 pixels of the same color together you get the equivalent of a single 1.6-micron photoreceptor, instead of the (poor) 0.8-micron single light receptor.

What you have to remember, however, is that this is only slightly larger than the pixels of the more common Sony IMX363, which measure 1.4 microns, not to mention that in that case the pixels really have that size and are not interpolated. On the IMX586, taking into account the Quad Bayer array (to simplify, the grouped version resulting in 12 MP), the simulated photoreceptors have only about 30% more area than those of the IMX363. Conversely, if we take into account the individual 0.8-micron pixels, each one has about 67% less area.
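The percentages follow directly from the pixel pitches quoted above, assuming square pixels so that area scales with the square of the side length. A quick check (the function name is mine):

```python
def area_change_percent(pitch_um, reference_pitch_um):
    """Percentage change in pixel area relative to a reference pixel pitch.

    Assumes square pixels, so area scales with the square of the pitch.
    """
    return ((pitch_um / reference_pitch_um) ** 2 - 1) * 100


# Grouped 1.6-micron "pixel" vs. the 1.4-micron IMX363 pixel
print(round(area_change_percent(1.6, 1.4)))  # 31
# Individual 0.8-micron pixel vs. the 1.4-micron IMX363 pixel
print(round(area_change_percent(0.8, 1.4)))  # -67
```

The first figure works out to roughly 30.6%, which is where the "about 30%" comes from.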

However, Sony says that it only uses the native resolution in bright conditions, because such small pixels really need a lot of light to perform properly. The smaller the photoreceptor, the more likely it is that noise will appear (resulting in degradation of image quality) as the brightness drops. When the light is less than ideal, the pixel grouping system is used to reduce the noise and increase the overall sensitivity of the sensor (a better signal-to-noise ratio) at the expense of resolution.

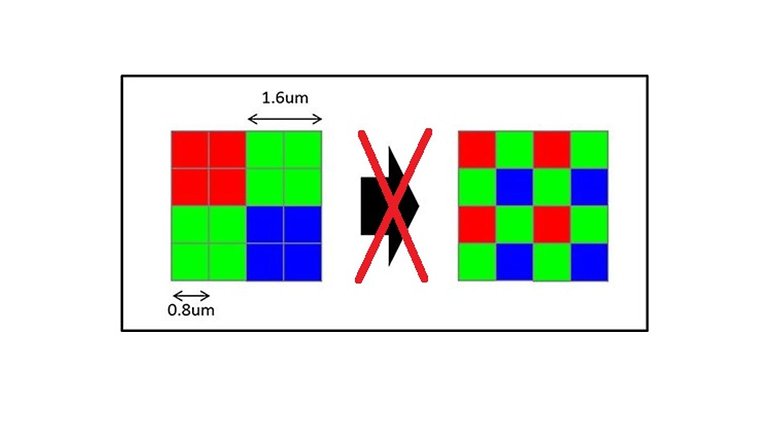

As we have seen before, however, the photoreceptors are arranged using a Quad Bayer matrix, and this is where my biggest doubt arises. Since these pixels are physically filtered to pass only certain light frequencies, it is not possible to rearrange a Quad Bayer matrix into a Bayer matrix to take full advantage of the 48 MP. The individual photoreceptors would need to be physically moved.

The result is therefore only an approximation of what a true 48 MP sensor with a Bayer matrix would be able to capture. How come? Because it is more difficult to determine the color captured by individual pixels when relying on information from neighbours who likely share the same color.

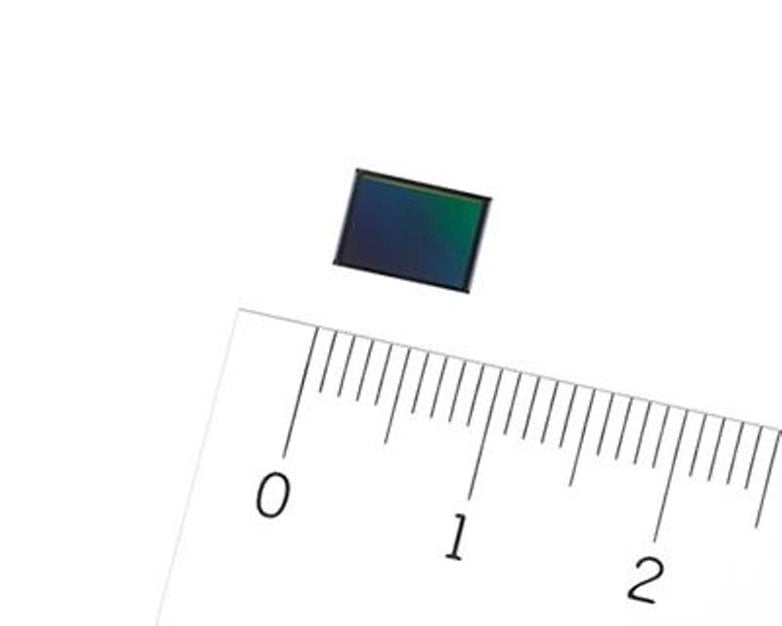

Size matters

The Sony IMX586 has a surface of 30mm², and this is where it must stuff all of its 48 million pixels. The sensor is therefore smaller than the 40 MP one used on the Huawei P20 Pro or Mate 20 Pro, which measures 45mm² (7.76x5.82mm) and shares the same Quad Bayer matrix. Not only that, but the now extinct Nokia Lumia 1020 (41 MP, 58mm²) and Nokia 808 (41 MP, 85mm²) do much better, with the Symbian OS device still reigning supreme with the largest sensor ever inserted into the body of a smartphone (excluding the Panasonic Lumix DMC-CM1 experiment).
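One way to compare these sensors is to work out how much surface each pixel gets. The sketch below uses only the areas and megapixel counts quoted above and assumes, as a simplification, that the whole quoted surface is active pixel area and that pixels are square, so the results are rough estimates rather than official pixel sizes.

```python
import math

# (surface in mm², megapixels), figures as quoted in this article
SENSORS = {
    "Sony IMX586": (30, 48),
    "Huawei P20 Pro": (45, 40),
    "Nokia Lumia 1020": (58, 41),
    "Nokia 808": (85, 41),
}


def implied_pitch_um(area_mm2, megapixels):
    """Estimated pixel side length in microns, assuming square pixels
    that fill the entire quoted surface."""
    area_um2 = area_mm2 * 1_000_000  # 1 mm² = 1,000,000 µm²
    return math.sqrt(area_um2 / (megapixels * 1_000_000))


for name, (area, mp) in SENSORS.items():
    print(f"{name}: {implied_pitch_um(area, mp):.2f} µm")
```

For the IMX586 this gives about 0.79 µm, which is consistent with the 0.8-micron figure mentioned earlier.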

In addition, many other factors must be taken into account, such as the lenses used and the processing that is done to the data collected by the sensor. For example, the image processing done by Honor and its Kirin 980 on the View20 newcomer could be very different from that performed by competitors with different chips.

For now, I have to admit, I wasn't impressed. I used an Honor View20 in Berlin and during the winter most days are dominated by the color gray. I was able to get pleasant images in broad daylight only by taking advantage of the 12 MP capture modes (however not at the level of other smartphones I have tested). In comparison, the photos I took with the 48 MP mode were mostly unusable. The sensor seems to lose a lot of quality at the edges of photos and I also noticed a lot of noise when zooming. Would you really buy a smartphone to take pictures only during the day and only on sunny days?

Below you can see two examples of photos taken at 48MP during the day and at night in less than ideal conditions:

I'm not saying that you should avoid buying smartphones that use the Sony IMX586 sensor, but it seems fair that you are aware that:

- More megapixels do not necessarily result in better photos.

- The sensor is not larger (and therefore does not capture more light) than the competition.

- The software and hardware that accompany it can make a huge difference in the era of computational photography.

- You shouldn't let yourself be fooled by the marketing that revolves around these huge numbers of pixels.

- If the flagships (which are constantly under the magnifying glass of the fans) do not use this sensor, maybe there is a reason for this.

- This is only my opinion.

Now, after reading my personal outburst supported by the data I was able to collect during my research, feel free to challenge me or share your opinion in the comments!

Source: DP Review

Nice video review of the Honor 10 by that lady.

Hi, I sent you a couple of emails, but got no reply. I'd like to challenge your assertion and say that the IMX586 is capable of full 48 MP images. To achieve this, you shift by one row and one column of pixels and then group the 4 neighbouring pixels to form a standard Bayer RGGB array like this:

https://eskerahn.dk/wordpress/wp-content/uploads/2018/07/IMX586.png

I believe this is called array conversion, which is supported on the IMX586 chip.

So for good lighting, you get 48 MP, and for low light, it pixel bins down to 12 MP to increase sensitivity. What do you think?
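The regrouping described in this comment can be checked on paper. The sketch below assumes a repeating 4x4 Quad Bayer tile starting with red, and only verifies that every 2x2 window on the shifted grid contains one red, two green and one blue sample. It does not model Sony's actual array conversion logic, and the function names are made up.

```python
from collections import Counter

# One repeating Quad Bayer tile (assumed to start with red in the top-left corner)
QUAD_TILE = ["RRGG",
             "RRGG",
             "GGBB",
             "GGBB"]


def color_at(y, x):
    """Filter color at row y, column x of an endlessly repeating Quad Bayer mosaic."""
    return QUAD_TILE[y % 4][x % 4]


def shifted_window(y, x):
    """Colors of the 2x2 window whose top-left corner is at (y, x)."""
    return [color_at(y + dy, x + dx) for dy in (0, 1) for dx in (0, 1)]


# Windows start on odd rows and columns: the grid shifted by one row and one column
windows = [shifted_window(y, x) for y in range(1, 9, 2) for x in range(1, 9, 2)]
print(all(Counter(w) == Counter("RGGB") for w in windows))  # True
print(shifted_window(1, 1))  # ['R', 'G', 'G', 'B']
print(shifted_window(1, 3))  # ['G', 'R', 'B', 'G']
```

Note that the order of the colors inside the window changes from one window to the next (RGGB, GRBG and so on), so the shifted groups are not a regular Bayer mosaic as they stand, even though each contains the right mix of filters.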

Agreed, and the Asus 5Z, which has the IMX363 sensor, offers balanced performance in the mid-budget segment and takes some nice photographs, as can be seen on YouTube or in other reviews.

The thing that really bothers me about the "more is better" idea is that a GOOD 8 MP sensor can produce a good quality A3 (11x17) print. Who prints anymore (except me, I'm in the photocopy/printer/large format business along with being an avid photographer)? MORE megapixels equate to a sharper image that shows up in the smaller detail, AND especially when zooming in and cropping. You don't lose the detail as much. The other issue I have is with stuffing more and more image sensors onto such a TINY substrate. When you do, you run into the possibility of crosstalk and a worse signal-to-noise ratio. That can inhibit a good photo because the software will attempt to knock the noise down (sparkles in lower light photos). This can flatten the photo and take some of the "punch" out of it.

Instead of stuffing more sensors, I'd rather they make the sensor LARGER, and make each individual sensor LARGER, to capture more of the available light. Along with that, a LARGER hunk of glass on the front, to capture the available light. But, THAT won't happen because the fashion industry dictates how smartphones look, and, they want slim & stylish.

That's why I "love" marketing !... pfff...

Fully agree with what's said. What I've been thinking is that, assuming a proportional increase in image quality, more mpx would permit more effective cropping and thereby good digital telephoto. It's also about, to paraphrase Steve Ballmer, "Algorithms, Algorithms, Algorithms". Huawei examples over at DPReview haven't been all that impressive.

But it has to be said that the best algorithmic smartphone software is miles ahead of the clunky controls on 5-digit premium DSLRs and mirrorless cameras for most conventional situations, especially for unskilled hobby photographers. (My standing "modest proposal" for the OEMs that make both good phones and good camera bodies, e.g. Sony, is to build a competent mirrorless camera body that snaps their own phone into the back for display and navigation, and provide an API for third party app developers. I'd buy that phone and that camera.)

Very good article, by the way.

Thank you so much!

And thanks to all of you guys for going deeper on the topic, I love to read these kinds of comments :)