Apple to Enable AI-Powered Eye Tracking Feature on iPhone and iPad

Apple won't announce iOS 18 and iPadOS 18 until WWDC 2024 next month, but it is already previewing a few new accessibility features that will most likely be included in these major updates. The suite of new tools includes an eye-tracking capability and other life-enhancing features that will arrive on iPhones and iPads later this year.

Eye-tracking for iPhones and iPads

Apple is taking a page from the eye-tracking technology used in the Vision Pro and bringing it to iPhones and iPads. According to Apple, the feature relies on the front-facing camera and lets users control an iPhone or iPad simply by looking at a specific area of a page or app.

Apple added that no additional hardware or accessories are required to use eye tracking on these devices. However, the feature needs a quick setup and calibration, and it relies on on-device machine learning. The company also noted that eye tracking works across iOS and iPadOS apps.

The idea itself is not new. Manufacturers like Samsung and LG have previously added eye-tracking technology to their smartphones, although it was limited to certain uses like browsing and video playback.

Listening via vibrations

Another accessibility feature is Music Haptics, which is meant to help people who are deaf or hard of hearing. When it is enabled, the iPhone's vibration motor produces taps and varying vibrations that follow the audio of the song or track currently playing.

Music Haptics works with millions of songs in the Apple Music app, and Apple intends to release it as an API later this year so developers can bring it to third-party apps.

No more motion sickness?

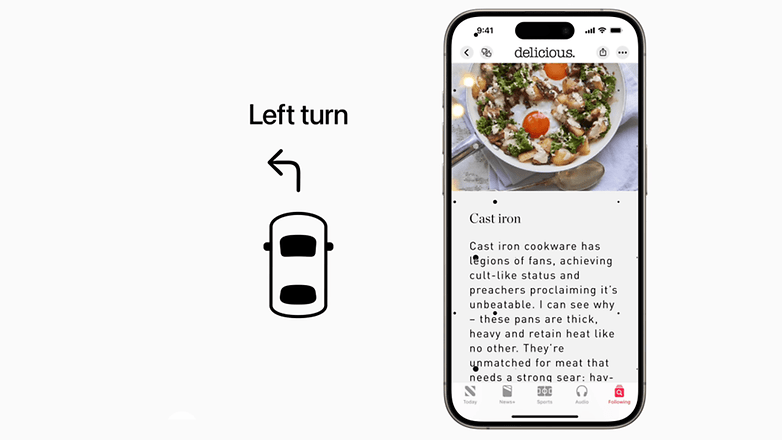

If you use a smartphone or tablet while commuting or traveling in a vehicle, the new Vehicle Motion Cues feature could help reduce motion sickness.

As the name implies, it assists users in a moving vehicle by displaying animated dots along the edges of the iPhone or iPad screen. The dots move in step with the direction and motion of the vehicle, as detected by the device. Apple says this will “reduce sensory conflict,” which commonly causes motion sickness in passengers.

Speech enhancements

Apple is tapping into AI for new speech enhancements coming in iOS 18. These include Vocal Shortcuts, which lets users assign custom utterances that Siri can recognize to trigger shortcuts and complete tasks, and Listen for Atypical Speech, which uses machine learning to better recognize a wider range of speech patterns. Both are designed primarily for users whose speech is affected by conditions like stroke and ALS.

Voice control and sound recognition for CarPlay

Two key accessibility features from iPhone and iPad are also coming to CarPlay. Voice Control will let users operate CarPlay apps and features hands-free, while Sound Recognition will display visual alerts on the car's screen for car horns and sirens.

Beyond these changes, other improvements are planned for future software updates. Magnifier is getting a Reader Mode and the option to launch Detection Mode with the Action button, and AssistiveTouch is gaining a new Virtual Trackpad.

On a separate note, Vision Pro will receive Live Captions, which will display captions for spoken dialogue in apps with audio and during FaceTime calls.

Many of these new accessibility tools are expected to arrive with the iOS 18 and iPadOS 18 updates, which will ship to compatible iPhones and iPads this fall. Which of these additions is your favorite, and which do you think is the most essential?

Source: Apple