Apple Adds Live Speech and AI-Based Voice Generation in iOS 17

Apple is previewing several iOS 17 features that are focused on enhancing speech, vision, and cognitive accessibility on iPhones and iPads. Among the improvements are text-to-speech and AI-based personal voice generation, which are expected to roll out alongside Assistive Access later this year.

Apple continues to build on its existing accessibility features while introducing new capabilities. Live Speech and Assistive Access are entirely new functions. Point and Speak is an update to Apple's built-in Magnifier app, which also received Door Detection in iOS 16 last year.

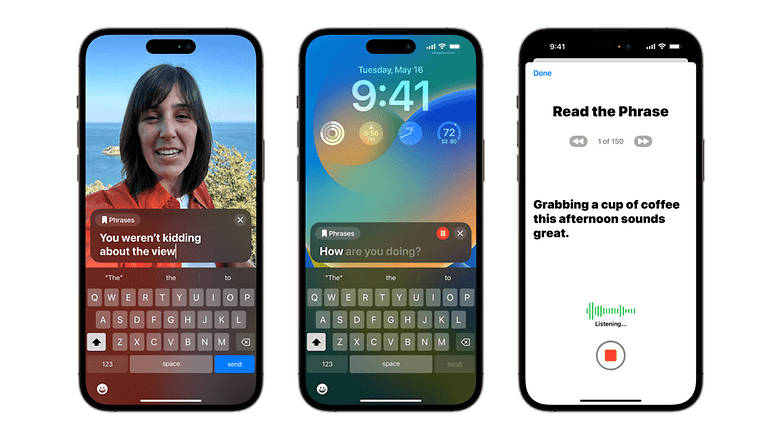

Live Speech and voice generation come to iOS 17

Live Speech is Apple's take on text-to-speech, and it will be integrated into FaceTime and regular phone calls. According to Apple, users can type what they want to say, and the feature will read it aloud to the person or group on the call. Apple says Live Speech will arrive on iPhone, iPad, and Mac.

The other speech-related feature is Personal Voice, which uses on-device machine learning. It lets users create a synthesized version of their own voice by reading prompted text for 15 minutes. This will come in handy for people at risk of losing their ability to speak due to conditions such as amyotrophic lateral sclerosis (ALS). The technology behind it resembles the AI voice generation that Amazon announced last year.

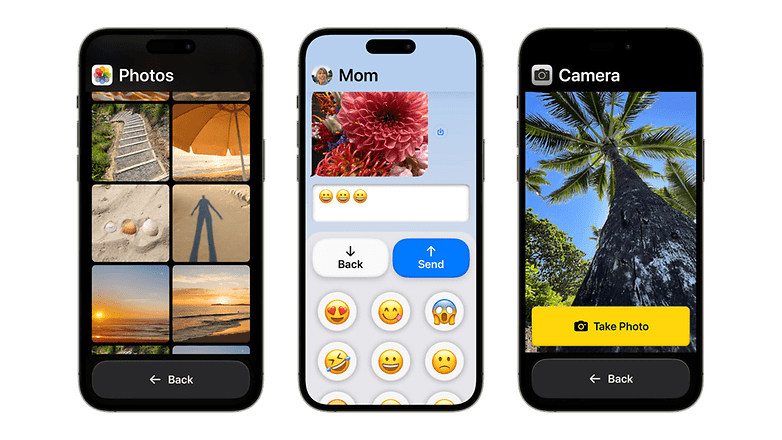

Assistive Access simplifies the Apple app experience

Apple's new Assistive Access provides a customized app experience. Rather than adding more controls and input options to apps, the feature simplifies them for older users and people with cognitive and visual disabilities by offering enlarged buttons and text. It supports the Camera, Messages, and Phone apps, as well as Apple Music and Photos.

Magnifier gets Point and Speak

In addition to detecting people and doors and reading out image descriptions, the iPhone's Magnifier app can now identify more objects through Point and Speak. The feature is designed to give visually impaired users more help with everyday tasks. It works with the short text labels found on appliances. For example, a user can point at a button on a microwave, and the app will read the button's label aloud.

Apple also announced other accessibility updates. For people with motor disabilities, Switch Control will be able to turn a switch into a virtual game controller. Apple also said that hearing aids made for iPhone will now be compatible with Macs. Finally, Voice Control will offer phonetic suggestions, while VoiceOver users will be able to customize how fast Siri voices speak.

Apple hasn't confirmed whether all of these improvements will debut with iOS 17 or arrive separately in a later minor update. In the meantime, which of these features do you find most helpful?

Source: Apple