Deepfakes for Webcams Are Here: It's Even Harder to Spot AI

Recent advances in AI have brought not only smarter, more sophisticated chatbots but also significant challenges, particularly with the rise of deepfakes. While most deepfakes are generated after the fact, a new tool takes things up a notch with a real-time AI face swap that can be used during video calls.

A new AI project called Deep-Live-Cam has recently gained traction online for its ability to apply deepfakes to a webcam feed. At the same time, it has sparked discussion about the security hazards and ethical questions it raises.

How Deep-Live-Cam is different from other deepfake programs

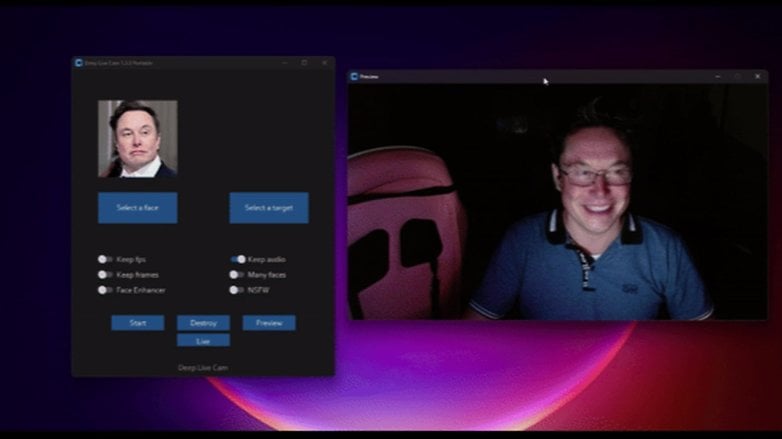

Essentially, Deep-Live-Cam uses AI models that take a single source photo and apply that face to a target in a live video feed, such as a webcam stream during a call. While the project is still under development, the initial tests already show results that are both impressive and concerning.

As described by Ars Technica, the application first detects faces in both the source photo and the target subject. It then uses an inswapper model to swap the faces in real time, while another model enhances the quality of the faces and adds effects that adapt to changing lighting conditions and facial expressions. This process makes the end result highly realistic and hard to recognize as a fake.
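The detect, swap, and enhance steps described above can be pictured as a per-frame loop. The following is only a rough structural sketch in Python, not Deep-Live-Cam's actual code: every name here (`Frame`, `FaceBox`, `swap_stream`, and the `toy_*` functions) is a hypothetical placeholder, and the toy functions stand in for the real face detector, inswapper model, and enhancement model.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List

# All names below are hypothetical placeholders, not Deep-Live-Cam's API.
Frame = List[List[int]]  # a toy grayscale image: rows of pixel values


@dataclass
class FaceBox:
    """Rectangle marking where a face was found in a frame."""
    top: int
    left: int
    height: int
    width: int


def swap_stream(
    frames: Iterable[Frame],
    source_face: Frame,
    detect: Callable[[Frame], List[FaceBox]],
    swap: Callable[[Frame, FaceBox, Frame], Frame],
    enhance: Callable[[Frame], Frame],
) -> Iterator[Frame]:
    """Run detect -> swap -> enhance on each frame, one frame at a time."""
    for frame in frames:
        out = frame
        for box in detect(frame):
            out = swap(out, box, source_face)
        yield enhance(out)


# Toy stand-ins so the sketch runs; a real system would use trained models.
def toy_detect(frame: Frame) -> List[FaceBox]:
    # Pretend a 1x1 "face" is always found in the top-left corner.
    return [FaceBox(top=0, left=0, height=1, width=1)]


def toy_swap(frame: Frame, box: FaceBox, source: Frame) -> Frame:
    # Copy the frame, then paste the source pixels into the detected box.
    out = [row[:] for row in frame]
    for r in range(box.height):
        for c in range(box.width):
            out[box.top + r][box.left + c] = source[r][c]
    return out


def toy_enhance(frame: Frame) -> Frame:
    # Stand-in for enhancement: clamp pixel values to the 0-255 range.
    return [[max(0, min(255, p)) for p in row] for row in frame]
```

Because `swap_stream` is a generator, each frame is processed as it arrives rather than after the whole video has been recorded, which is the difference between a live face swap and a post-generated one.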

For example, one of the clips shared by a developer showed a realistic fusion of Tesla CEO Elon Musk's face onto a subject. The deepfake even included an overlay of prescription glasses and the subject's hair, making it incredibly convincing. Other examples used the faces of US vice presidential candidate JD Vance and Meta's Mark Zuckerberg.

Should you worry about the rise of AI simulation apps?

So, why is this especially concerning? The use of Deep-Live-Cam and other real-time deepfake apps raises serious concerns about privacy and security. Imagine that a picture of you is grabbed from the internet and used for fraud, deception, and other malicious activities without your permission.

For now, these risks could be addressed in a few ways, such as adding watermarks to the app's output and developing robust detection methods. The same measures could also be applied to other deepfake programs and apps.

Interest in the tool quickly pushed it onto GitHub's list of trending projects.

What are your thoughts on real-time AI simulation apps? Do you have practices to share on how to protect yourself from these potential risks? We'd like to hear your answers in the comments.

Via: Ars Technica
