Facebook and Messenger for Teens Are Back: This Time, Meta’s Making It Safer

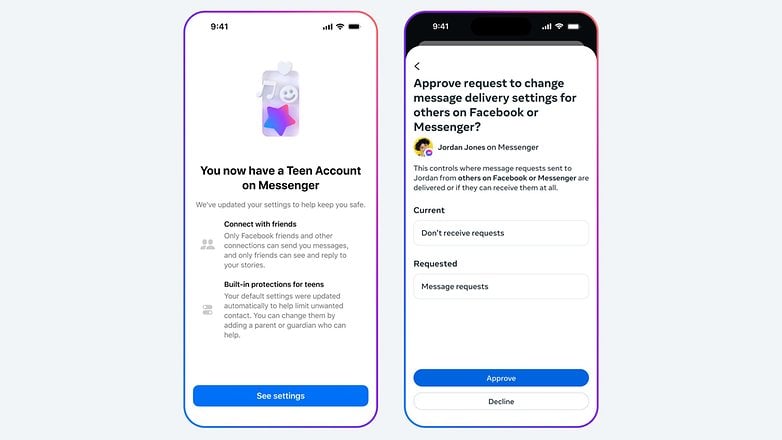

Teens who were previously restricted from using Facebook and Messenger now have the option to return, thanks to Meta's Teen Accounts feature. The company announced that it is expanding this safety-focused tool, previously introduced on Instagram, to its core social and messaging platforms. The rollout begins in select countries, including the USA and Canada.

Last year, Meta launched Teen Accounts on Instagram, targeting users aged 13 to 15. These accounts come with built-in protections, such as parental controls and limits on who teens can contact and what content they can access. Now, the same safeguards are arriving on Facebook and Messenger.


Parental Controls and Well-being Features

With a Teen Account, young users will be able to message and interact only with people already in their contacts. Likewise, only people they have previously followed or messaged will be able to contact them. The same restriction applies to mentions and tags, and their stories will be visible only to their friends.

Although teens can adjust some privacy settings, any changes will require guardian approval before they take effect. Teen Accounts also promote healthy digital habits: teens get a reminder to take a break after one hour of use, and Quiet Mode is enabled during nighttime hours.

Parents or guardians will also gain visibility into how their child uses the platform. They can monitor screen time, view their teen's friend list, and manage various safety settings—all designed to create a safer online experience for younger users.

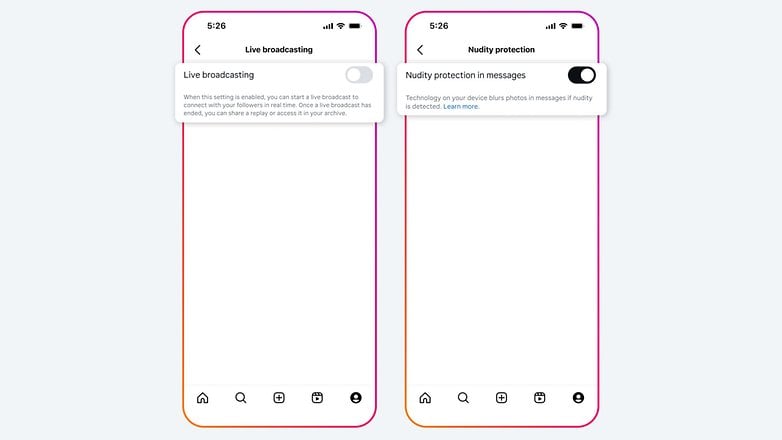

Alongside the Facebook and Messenger updates, Meta is introducing new safety features on Instagram. For example, teens under 16 will be blocked from using Instagram Live by default and will need parental permission to access the feature. In direct messages, teens will not be able to turn off the automatic blurring of suspected explicit or nude content without a parent's approval.

Meta says these features will first become available in the USA, Canada, Australia, and the UK, with a broader global rollout expected in the near future. The updated Instagram safety tools are scheduled to arrive this spring.

These changes come as Meta faces increased regulatory scrutiny, with critics alleging that the company hasn’t done enough to protect teens from excessive screen time and the mental health risks associated with social media. Meta says these updates are part of its broader commitment to addressing these concerns proactively.

What do you think about Meta’s new teen safety tools? Do you feel they go far enough to protect young users online? Let us know in the comments below.

Source: Meta