Machine learning and AI: How smartphones get even smarter

Artificial intelligence (AI) and machine learning (ML): these keywords became the major trend of 2017/18, and some smartphones now even have their own AI chip, which, according to manufacturers, is meant to make smartphones truly smart. But how much of this is just hype, and to what extent are these buzzwords actually justified? We take a closer look at the fields of application and the chips to separate fact from fiction.

When Huawei presented the Kirin 970, made by its subsidiary Hisilicon, at IFA as the first smartphone processor with an AI unit, the Chinese company captured the public's attention. The Neural Processing Unit (NPU) on this SoC is specifically responsible for functions that can be performed faster and better with AI than with a traditional processor. At present, these are mainly functions related to photography and to image recognition and processing.

First of all, artificial intelligence is the wrong term: at their core, today's so-called AI applications really use machine learning. A convolutional neural network (CNN) learns to recognize patterns from a large amount of raw data, largely on its own rather than by following hand-written rules. This training usually takes place on large server farms because it requires an immense amount of data and computing power. A machine learning application can then apply the patterns learned in this first step to new data; this second step is known by the technical term ‘inference’. For smartphones, inference is the relevant step. A trained neural network can, for example, recognize objects or faces in an image.
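To make the two phases concrete, here is a deliberately tiny Python sketch. It assumes a single perceptron on made-up two-feature data instead of a real CNN (a CNN is vastly larger but splits into the same two phases): `train` adjusts the weights from labelled examples, the expensive part normally done on servers, while `infer` only applies the fixed weights, which is what a phone does. All names and data here are illustrative.

```python
from typing import List, Tuple

Sample = Tuple[List[float], int]  # (features, label 0 or 1)


def infer(weights: List[float], bias: float, x: List[float]) -> int:
    """Inference: one cheap forward pass with already-trained, fixed weights."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train(samples: List[Sample], epochs: int = 20, lr: float = 1.0) -> Tuple[List[float], float]:
    """Training: repeatedly adjust weights from labelled examples (perceptron rule).

    A stand-in for CNN training, which uses far more data and compute.
    """
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, label in samples:
            error = label - infer(weights, bias, x)
            # Nudge the decision boundary only when a sample is misclassified.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Phase 1 (server side in practice): learn a toy "both features present" pattern.
training_data: List[Sample] = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train(training_data)

# Phase 2 (on the phone): apply the learned pattern to new, unseen inputs.
print(infer(weights, bias, [0.9, 0.8]))  # → 1
print(infer(weights, bias, [0.2, 0.3]))  # → 0
```

Note that `infer` is just a handful of multiplications and additions; training calls it over and over while also updating the weights, which is why the two phases end up on very different hardware.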

In principle, a classic CPU can perform the same computations. The individual arithmetic operations are fairly simple, but there are so many of them that even an octa-core processor struggles. More cores are therefore the key to success, and each core doesn't have to be very capable: a chip with a large number of small processors can run machine learning applications more efficiently than a standard processor.
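The following Python sketch illustrates why "many small cores" pays off. It assumes a naive single-channel convolution (the core operation of a CNN layer) and a layer size chosen purely for illustration: every output pixel is a short run of multiply-accumulate (MAC) operations that reads only the input, so all of them could be computed at the same time, yet a single realistically sized layer already needs billions of them.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Naive 'valid' 2D convolution (cross-correlation, as CNN layers compute it)."""
    k = len(kernel)
    h_out = len(image) - k + 1
    w_out = len(image[0]) - k + 1

    def output_pixel(r: int, c: int) -> float:
        # k*k multiply-accumulates that read only the input, never other
        # outputs: every pixel is independent and could run on its own core.
        return sum(image[r + i][c + j] * kernel[i][j]
                   for i in range(k) for j in range(k))

    return [[output_pixel(r, c) for c in range(w_out)] for r in range(h_out)]


def conv_layer_macs(h_out: int, w_out: int, c_in: int, c_out: int, k: int) -> int:
    """MAC count of one convolution layer: each output value needs k*k*c_in MACs."""
    return h_out * w_out * c_out * k * k * c_in


print(conv2d_valid([[1, 2, 3], [4, 5, 6], [7, 8, 9]], [[1, 0], [0, 1]]))
# → [[6, 8], [12, 14]]

# Illustrative layer: 224x224 output, 3x3 kernel, 64 input and 64 output channels.
print(f"{conv_layer_macs(224, 224, 64, 64, 3):,}")  # → 1,849,688,064
```

Each individual step is trivial, but nearly two billion of them for one layer is exactly the kind of workload where a few fast CPU cores lose to many simple ones.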

Graphics chips are made for machine learning

Where do you find this many cores? In the graphics chip, of course: the GPU. However, the GPU is also needed for standard tasks in everyday smartphone use. That's why NPUs are designed much like an additional GPU: huge numbers of compute cores process the many operations simultaneously, which gives them a large performance advantage in machine learning applications.

And why do you even need this in a smartphone? These computations could also be carried out on large, powerful servers in a data center, with the data exchanged via the cloud. Sure, they could, but the cloud has well-known disadvantages that also apply to machine learning. It only works reliably with a stable internet connection, and transferring the data would quickly lead to unacceptable delays. The camera app, for example, would have to constantly upload preview images to the server in order to recognize what is in the picture. And that reveals another major drawback: users would be skeptical about data protection.
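A rough back-of-the-envelope calculation shows why the cloud struggles with a live camera preview. The sketch below uses purely hypothetical numbers (a 200 kB compressed preview frame with 1 kB = 1,000 bytes, a 5 Mbit/s mobile uplink, a 60 ms network round trip and 20 ms of server processing); the point is the structure of the sum, not the exact values.

```python
def cloud_latency_s(frame_kb: float, uplink_mbit_s: float,
                    rtt_ms: float, server_ms: float) -> float:
    """Seconds until one uploaded frame comes back classified (requests not overlapped)."""
    upload_s = (frame_kb * 8) / (uplink_mbit_s * 1000)  # kbit / (kbit/s)
    return upload_s + rtt_ms / 1000 + server_ms / 1000


# Hypothetical figures, for illustration only.
latency = cloud_latency_s(frame_kb=200, uplink_mbit_s=5, rtt_ms=60, server_ms=20)
print(f"{latency:.2f} s per frame, at most {1 / latency:.1f} frames per second")
# → 0.40 s per frame, at most 2.5 frames per second
```

Even under these fairly generous assumptions, the round trip limits recognition to a few frames per second before any connection dropouts are counted, whereas on-device inference needs no upload at all.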

There is therefore no alternative to on-device machine learning for many applications.

What are smartphones currently doing with the chip?

Several smartphones already have an NPU, and your phone may already offer comparable capabilities, because many current Qualcomm chipsets use heterogeneous computing.

Heterogeneous computing makes it possible to run each task on the most suitable chip in the smartphone. There are several candidates: the classic CPU, the GPU and the digital signal processor (DSP). Each has different strengths. The CPU excels at complex computations that cannot be run in parallel, the GPU accelerates computations that can be carried out simultaneously, and the signal processor is optimized for real-time processing. Machine learning workloads can therefore be assigned to whichever processor suits them best.
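Conceptually, heterogeneous computing boils down to a dispatch decision. The Python sketch below is purely hypothetical: the `Task` fields and the priority order of the rules are assumptions made for illustration, not how Qualcomm's software actually schedules work.

```python
from dataclasses import dataclass
from enum import Enum


class Unit(Enum):
    CPU = "CPU"  # complex, sequential logic
    GPU = "GPU"  # many independent operations at once
    DSP = "DSP"  # continuous real-time signal processing


@dataclass
class Task:
    name: str
    parallel: bool   # can the work be split into many independent operations?
    realtime: bool   # must it keep pace with a continuous signal stream?


def pick_unit(task: Task) -> Unit:
    """Hypothetical rule set: real-time first, then parallelism, else CPU."""
    if task.realtime:
        return Unit.DSP
    if task.parallel:
        return Unit.GPU
    return Unit.CPU


for t in [Task("denoise microphone input", parallel=True, realtime=True),
          Task("classify a photo", parallel=True, realtime=False),
          Task("parse app settings", parallel=False, realtime=False)]:
    print(f"{t.name}: {pick_unit(t).value}")
# → denoise microphone input: DSP
# → classify a photo: GPU
# → parse app settings: CPU
```

A real scheduler would also weigh power budgets, current load and which operations each unit supports; the fixed rules here only mirror the strengths listed above.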

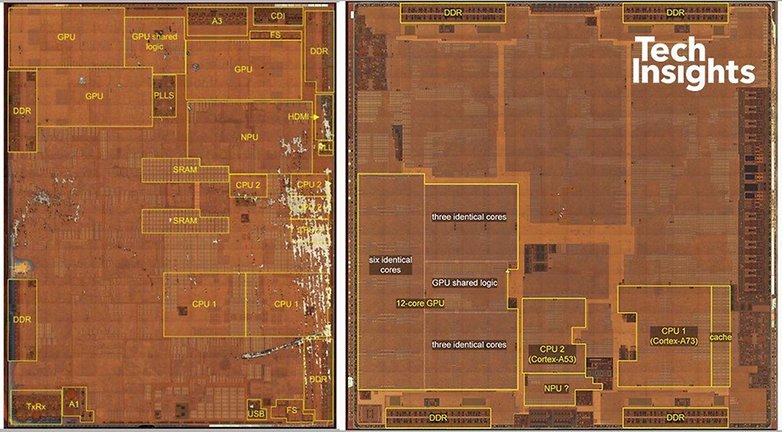

We already mentioned the Kirin 970 with its NPU. Shortly afterwards, Apple presented the new iPhones, and their A11 chip of course also has an NPU, which is used by Face ID. No concrete uses beyond Face ID are known yet, but this is likely to change soon. The picture above shows that the NPU in the Kirin 970 is quite small (bottom center), whereas Apple devotes a fairly large part of the chip to its NPU (top right).

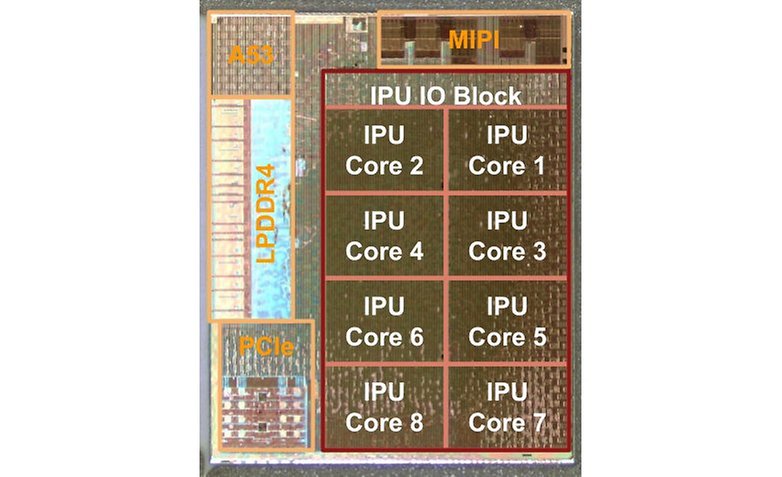

The Google Pixel 2 (XL), on the other hand, contains the Pixel Visual Core, which is said to perform three trillion operations per second. The Visual Core is a classic NPU, but with an eventful history: the chip initially sat unused, and when it was finally activated with Android 8.1, it was only so that third-party apps could use HDR+. The standard camera app doesn't use the Visual Core.

Starting with Android 8.1, there is the Neural Networks API (NN-API), which app developers can use to access NPUs without knowing the technical details of each implementation. If a smartphone has no NPU, the CPU is used as a fallback. Before that, access was only possible via manufacturer-specific routines. In fact, on the Pixel the NN-API is the only way to access the chip. Google has also introduced a higher-level library that fully supports the NN-API: TensorFlow Lite. This library lets you run trained neural networks on smartphones and could soon become standard in many Android apps.
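The fallback behaviour described above can be sketched as a simple preference list. Everything in this Python example is hypothetical: the backend names, the `select_backend` helper and the toy model only illustrate the idea of an abstraction layer with a CPU fallback and are not NN-API's actual interface (on a real device, TensorFlow Lite can hand a model to NN-API, which then decides which hardware runs it).

```python
from typing import Callable, Dict, List, Tuple

Backend = Callable[[List[float]], List[float]]


def toy_model(x: List[float]) -> List[float]:
    """Stand-in for a trained network; every backend must compute the same result."""
    return [2 * v + 1 for v in x]


def select_backend(available: Dict[str, Backend],
                   preference: List[str]) -> Tuple[str, Backend]:
    """Return the first preferred backend the device offers; the CPU is the fallback."""
    for name in preference:
        if name in available:
            return name, available[name]
    raise RuntimeError("no backend available")


PREFERENCE = ["npu", "gpu", "cpu"]  # hypothetical priority order

phone_with_npu = {"npu": toy_model, "cpu": toy_model}
phone_without_npu = {"cpu": toy_model}

for device in (phone_with_npu, phone_without_npu):
    name, run = select_backend(device, PREFERENCE)
    print(name, run([1.0, 2.0]))
# → npu [3.0, 5.0]
# → cpu [3.0, 5.0]
```

The app calls `run` the same way in both cases; only the hardware underneath changes, which is the whole point of a vendor-neutral interface.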

Huawei uses machine learning for four apps

In the Huawei Mate 10 Pro and the Honor View 10, two technically almost identical smartphones, the Chinese manufacturer uses the NPU in four places. The first, and the most visible to the user, is scene recognition when taking photos, which adapts the camera parameters to the subject in front of the lens. A small icon in the camera app shows what the machine learning model has detected in front of the camera.
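How scene recognition might feed into the camera settings can be sketched in a few lines of Python. The labels, scores, presets and the 0.6 confidence threshold below are all hypothetical; Huawei has not published how its camera app maps detections to parameters.

```python
from typing import Dict, Tuple

Settings = Dict[str, float]

# Hypothetical presets; a real camera pipeline tunes many more parameters.
PRESETS: Dict[str, Settings] = {
    "food": {"saturation": 1.2, "exposure_ev": 0.3},
    "night": {"saturation": 1.0, "exposure_ev": 1.0},
    "portrait": {"saturation": 0.95, "exposure_ev": 0.0},
}
AUTO: Settings = {"saturation": 1.0, "exposure_ev": 0.0}


def choose_settings(scores: Dict[str, float],
                    threshold: float = 0.6) -> Tuple[str, Settings]:
    """Pick the most likely scene; fall back to 'auto' if the model is unsure.

    The returned label is what the app would show as a small scene icon.
    """
    label, confidence = max(scores.items(), key=lambda item: item[1])
    if confidence < threshold or label not in PRESETS:
        return "auto", dict(AUTO)
    return label, dict(PRESETS[label])


# Scores as a classifier running on the NPU might return them (made-up values).
print(choose_settings({"food": 0.82, "night": 0.10, "portrait": 0.08}))
# → ('food', {'saturation': 1.2, 'exposure_ev': 0.3})
print(choose_settings({"food": 0.40, "night": 0.35, "portrait": 0.25}))
# → ('auto', {'saturation': 1.0, 'exposure_ev': 0.0})
```

The threshold keeps the app from switching presets on a shaky guess; the expensive part, producing the scores for every preview frame, is what the NPU accelerates.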

Secondly, Huawei uses the NPU in the Kirin 970 to better understand and predict how the phone is used. This is supposed to allocate resources better and improve energy efficiency, at least on paper. The manufacturer makes such promises, but they can hardly be verified, since that would require two otherwise identical test devices, one of them with its NPU deactivated.
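Huawei doesn't say how its usage prediction works. As an illustration only, here is a Python sketch of one simple, well-known technique, a first-order Markov model that counts which app usually follows which; a system could use such a prediction to preload the likely next app. The class and app names are hypothetical, and this is not Huawei's method.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, List, Optional


class NextAppPredictor:
    """Counts app-to-app transitions and predicts the most frequent successor."""

    def __init__(self) -> None:
        self.transitions: DefaultDict[str, Counter] = defaultdict(Counter)

    def observe(self, sequence: List[str]) -> None:
        # Record each consecutive pair (previous app -> next app).
        for prev, nxt in zip(sequence, sequence[1:]):
            self.transitions[prev][nxt] += 1

    def predict(self, current: str) -> Optional[str]:
        # .get() does not create an entry, so unknown apps simply yield None.
        counts = self.transitions.get(current)
        if not counts:
            return None
        return counts.most_common(1)[0][0]


predictor = NextAppPredictor()
predictor.observe(["mail", "browser", "mail", "browser", "mail", "camera"])
print(predictor.predict("mail"))    # → browser
print(predictor.predict("camera"))  # → None
```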

The third purpose comes from a cooperation with Microsoft: Microsoft's translation app, which comes pre-installed on these smartphones, uses the NPU to translate faster, and in principle this also works offline.

In addition, CNNs have proven particularly well suited to reducing noise in audio signals. For instance, a trained neural network can act as a noise filter in loud environments and thus improve the recognition of voice commands. According to Hisilicon, the recognition rate in difficult environments could rise from 80 to 92 percent. Realistically, the necessary computations can only be carried out by an NPU.
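A rise from 80 to 92 percent sounds like twelve points, but it is more telling from the user's side: the share of failed voice commands drops from 20 to 8 percent. The short calculation below uses only Hisilicon's two figures quoted above.

```python
def failure_reduction(success_before_pct: int, success_after_pct: int) -> float:
    """Relative drop in failed commands when the success rate rises."""
    failed_before = 100 - success_before_pct
    failed_after = 100 - success_after_pct
    return (failed_before - failed_after) / failed_before


print(f"{failure_reduction(80, 92):.0%} fewer failed voice commands")
# → 60% fewer failed voice commands
```

In other words, in those difficult environments roughly three out of five commands that used to fail would now be recognized.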

Performance comparison of AI chips

Three available smartphones already have a Neural Processing Unit on board, and potentially many more will gain comparable capabilities through Qualcomm's heterogeneous computing approach. So you might start to wonder: which NPU is faster? To a certain extent, this goes hand in hand with the question of which NPU consumes less energy for the same task.

These questions are not easy to answer because of the peculiarities of NPUs. However, some early indications have emerged from the Chinese benchmark suite Master Lu, which Anandtech used to test a Mate 10 Pro, a Google Pixel 2 XL and an LG V30.

On the Mate 10 Pro, the benchmark used the NPU as intended, and the LG V30 was able to use heterogeneous computing on its Snapdragon 835. The Pixel 2 XL, in contrast, does not support Qualcomm's technology, and the benchmark doesn't support the NN-API. The Pixel 2 XL therefore delivers results for machine learning workloads running purely on the CPU.

And the results are not flattering for the CPU. Master Lu reports its results in frames per second. In the first test, the Pixel 2 XL manages 0.7 frames per second on the CPU, while the LG V30 achieves 5.3 fps with Qualcomm's heterogeneous computing. The Mate 10 Pro with its NPU achieves 15.1 fps. In terms of energy consumption, the NPU and Qualcomm's approach are similar: the Mate 10 Pro needs 106 millijoules (mJ) per inference and the V30 slightly more at 130 mJ. The Pixel, without machine learning acceleration, consumes 5,847 mJ. All three values come from Anandtech's measurements; for further technical details, see Anandtech's site.
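To put Anandtech's figures into proportion, the following Python sketch divides each phone's results by those of the CPU-only Pixel 2 XL. The inputs are exactly the numbers quoted above; only the ratios are new.

```python
from typing import Dict, Tuple

# (frames per second, millijoules per inference), as quoted from Anandtech above.
RESULTS: Dict[str, Tuple[float, float]] = {
    "Pixel 2 XL (CPU only)": (0.7, 5847),
    "LG V30 (heterogeneous computing)": (5.3, 130),
    "Mate 10 Pro (NPU)": (15.1, 106),
}


def relative_to(baseline: str) -> Dict[str, Tuple[float, float]]:
    """Speedup and energy saving per inference, relative to the baseline phone."""
    base_fps, base_mj = RESULTS[baseline]
    return {name: (fps / base_fps, base_mj / mj)
            for name, (fps, mj) in RESULTS.items()}


for name, (speedup, saving) in relative_to("Pixel 2 XL (CPU only)").items():
    print(f"{name}: {speedup:.1f}x faster, {saving:.1f}x less energy per inference")
# → Pixel 2 XL (CPU only): 1.0x faster, 1.0x less energy per inference
# → LG V30 (heterogeneous computing): 7.6x faster, 45.0x less energy per inference
# → Mate 10 Pro (NPU): 21.6x faster, 55.2x less energy per inference
```

The same arithmetic shows that the Mate 10 Pro delivers about 2.8 times the V30's frame rate (15.1 / 5.3) while using roughly 18 percent less energy per inference (106 mJ versus 130 mJ).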

For our purposes, it is clear that specialized processors for machine learning make a huge difference when they are used. Image recognition, noise reduction, improved voice recognition: these are all applications that could benefit from an NPU. Although Qualcomm's approach is inferior to the NPU in the Kirin 970, the Snapdragon 845 is likely to change that: its signal processor is said to be around three times faster and could therefore close the gap to Huawei's NPU.
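A quick, heavily simplified check of the "close the gap" claim: take the V30's Snapdragon 835 result of 5.3 fps as the baseline and assume it scales linearly with a signal processor three times as fast. That assumption is optimistic, since the benchmark does not run entirely on the signal processor and other bottlenecks remain, so treat the result as a best case rather than a prediction.

```python
def projected_fps(measured_fps: float, speedup: float) -> float:
    """Best case: throughput scales linearly with the accelerator's speedup."""
    return measured_fps * speedup


estimate = projected_fps(5.3, 3.0)  # V30 figure from above, claimed ~3x faster DSP
print(f"Snapdragon 845 (best case): {estimate:.1f} fps vs. Mate 10 Pro: 15.1 fps")
# → Snapdragon 845 (best case): 15.9 fps vs. Mate 10 Pro: 15.1 fps
```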

A smartphone with an NPU: do I really need one?

Let's not kid ourselves: given the current state of machine learning applications, you can still live very well without one. The functions that actually demonstrate the power of an NPU, or of specially adapted chips such as the new Snapdragon processors, are useful, but there are still few enough of them that you can do without an upgrade.

But this can change faster than you think, since all these functions are nothing but software. Updates can easily deliver them to smartphones that already have the necessary hardware, and app developers will soon be able to access NPUs and similar processors via the NN-API and TensorFlow Lite. So anyone who already owns a smartphone with an NPU should be among the first to use these new functions: the dream of any early adopter.

What is your opinion of machine learning on smartphones? Are you already looking out for an NPU? Or are machine learning features not important to you? Let us know in the comments!

Sources: Anandtech, TechInsights

As AI software evolves and chip performance increases, the next stage of AI development will be its implementation in hardware: smart chips, whose possible applications are very broad.