Exmor, Isocell & Co: why smartphone image sensors are so important!

While smartphone manufacturers are eagerly ramping up the number of megapixels in their cameras, reaching three-digit megapixel figures and touting fantasy-level zoom factors, a far more important camera feature remains pretty much an unknown quantity to many: the sensor size. Why is this so crucial for image quality? Find out below.

Contents:

- Sensor size in inches: From analog tubes to CMOS chips

- Effective sensor area: Larger is better

- Bayer Mask & Co.: now it gets colorful

- Camera software: the algorithm is everything

- Autofocus: PDAF, 2x2 OCL & Co.

Sensor size in inches: From analog tubes to CMOS chips

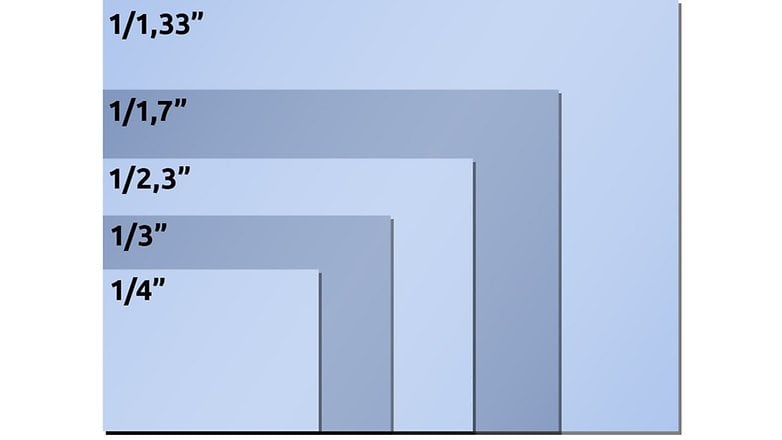

Let's start with some background information: in most smartphone specification sheets, the sensor size of the camera is given as 1/xyz inch, for example 1/1.72 inch or 1/2 inch. Unfortunately, this figure does not correspond to the actual size of your smartphone sensor.

Let's have a look at the specification sheet of the Sony IMX586, a 1/2-inch sensor: half an inch would correspond to 1.27 centimeters. The real size of the IMX586 doesn't have much to do with that, though. If we multiply the 0.8-micron pixel size by the horizontal resolution of 8,000 pixels, we end up with only 6.4 millimeters, i.e. approximately half that figure. If we do the same for the vertical resolution and then apply the Pythagorean theorem to obtain the diagonal, we end up with 8.0 millimeters. Even that is nowhere near 12.7 millimeters.
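The arithmetic above can be retraced in a few lines. This is only a sketch: the function name is made up for illustration, and the vertical resolution of 6,000 pixels is assumed from the sensor's 48-megapixel, 4:3 layout rather than taken from the text.

```python
import math

def sensor_dimensions_mm(pixel_pitch_um, width_px, height_px):
    """Return (width, height, diagonal) of the light-sensitive area in millimeters."""
    width_mm = pixel_pitch_um * width_px / 1000.0
    height_mm = pixel_pitch_um * height_px / 1000.0
    diagonal_mm = math.hypot(width_mm, height_mm)  # Pythagorean theorem
    return width_mm, height_mm, diagonal_mm

# Sony IMX586: 0.8 micron pixels, 8,000 x 6,000 pixels (vertical count assumed)
width, height, diagonal = sensor_dimensions_mm(0.8, 8000, 6000)
diagonal_inches = diagonal / 25.4

print(f"{width:.1f} mm x {height:.1f} mm, diagonal {diagonal:.1f} mm = {diagonal_inches:.2f} in")
# prints: 6.4 mm x 4.8 mm, diagonal 8.0 mm = 0.31 in
```

The result of roughly 0.31 inches is the real diagonal of a chip that is sold as a "1/2-inch" sensor.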

And here's the crux of the matter: the convention was introduced about half a century ago, when video cameras still used vacuum tubes as image converters. Marketing departments still cling to the approximate ratio of light-sensitive area to tube diameter from back then, with a persistence otherwise matched only by the penile extension emails in my spam folder. And that's why a CMOS chip with a diagonal of 0.31 inches is called a 1/2-inch sensor these days.

If you want to know the actual size of an image sensor, either take a look at the manufacturer's datasheet or the detailed Wikipedia page on image sensor sizes. Or follow the example above and multiply the pixel size by the horizontal and vertical resolution.

Effective sensor area: Larger is better

Why is the sensor size so important? Imagine the light coming through the lens onto the sensor as rain falling from the sky. You now have a tenth of a second to estimate how much water is currently falling.

- With a shot glass, this will be relatively difficult: in a tenth of a second, in heavy rain, perhaps a few drops will fall into the glass - in light rain or with a little bad luck, perhaps none at all. Your estimate will be very inaccurate either way.

- Now imagine you have a paddling pool to perform the same task. With this, you can easily catch a few hundred to a few thousand raindrops, and thanks to the larger surface, you can make a much more precise estimate of the amount of rain.

What applies to the shot glass, the paddling pool, and the rain also applies to sensor sizes and the amount of light, be it much or little. The darker it is, the fewer photons are captured by the light converters - and the less accurate the measurement result. These inaccuracies later manifest themselves as errors such as image noise, false colors, etc.
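The rain analogy can be put into numbers. The sketch below assumes that raindrops (and photons) arrive randomly and independently, so that counts follow Poisson statistics and the noise equals the square root of the mean count. That assumption and the two drop counts are illustrative choices, not figures from this article.

```python
import math

def relative_uncertainty(mean_count):
    """Noise divided by signal for a Poisson-distributed count."""
    return math.sqrt(mean_count) / mean_count

shot_glass = relative_uncertainty(4)        # a few drops in the glass
paddling_pool = relative_uncertainty(2500)  # a few thousand drops in the pool

print(f"shot glass: {shot_glass:.0%}, paddling pool: {paddling_pool:.0%}")
# prints: shot glass: 50%, paddling pool: 2%
```

Collecting about 600 times more drops reduces the relative error by a factor of 25, which is why a larger light-collecting area pays off most when light is scarce.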

Granted: the difference between smartphone image sensors is not quite as great as that between a shot glass and a paddling pool. But the aforementioned Sony IMX586 in the Samsung Galaxy S20 Ultra's telephoto camera is about four times larger in terms of area than the 1/4.4-inch sensor in the Xiaomi Mi Note 10's telephoto camera.

When it comes to zoom: whether the manufacturer writes "100x" or "10x" on the housing is about as meaningful as the top number on a car's speedometer. A VW Golf with a speedometer that goes up to 360 km/h will still not go faster than a Ferrari. However, this is a topic that should be explored in a different article.

Bayer Mask & Co.: now it gets colorful

Let us revisit the water analogy from above. If we now placed a grid of 4,000 by 3,000 buckets on a meadow, we could determine the amount of rainfall with a resolution of 12 megapixels and probably take some kind of picture of the water saturation of the rain cloud overhead.

However, if an image sensor with 12 megapixels were to capture the amount of light with its 4,000 by 3,000 photon traps, the resulting photo would be black and white - because we only measured the absolute amount of light. We can distinguish neither the colors nor the size of the falling raindrops. So how does the black and white data end up as a color photo?

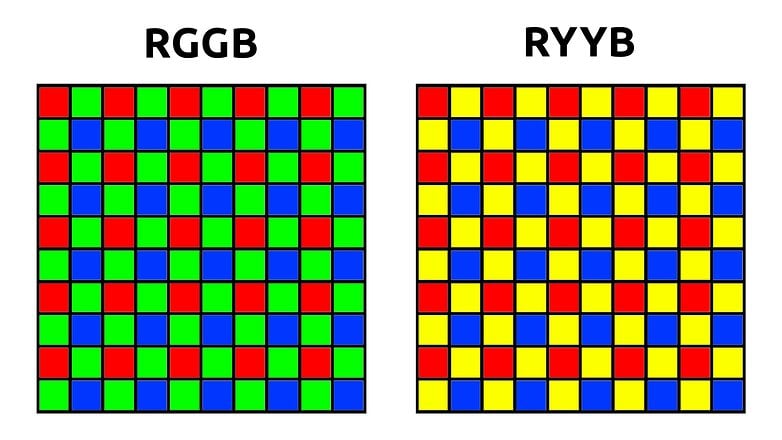

The trick is to place a color mask over the sensor, the so-called Bayer matrix. This ensures that either only red, blue, or green light reaches the pixels. With the classic Bayer matrix that has an RGGB layout, a 12-megapixel sensor then has six million green pixels and three million red and blue pixels each.
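The pixel counts can be checked with a small sketch. The helper names are hypothetical, and the code simply tiles the 2x2 RGGB pattern over a sensor with even dimensions.

```python
from collections import Counter

BAYER_RGGB = (("R", "G"), ("G", "B"))  # one 2x2 tile of the classic layout

def filter_color(row, col):
    """Color of the filter above the pixel at (row, col)."""
    return BAYER_RGGB[row % 2][col % 2]

def count_colors(width, height):
    """Count pixels per filter color by scaling up one 2x2 tile (even dimensions assumed)."""
    tiles = (width // 2) * (height // 2)
    counts = Counter()
    for tile_row in BAYER_RGGB:
        for color in tile_row:
            counts[color] += tiles
    return counts

counts = count_colors(4000, 3000)
print(counts["G"], counts["R"], counts["B"])
# prints: 6000000 3000000 3000000
```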

In order to generate an image with twelve million RGB pixels from this data, the image processing typically starts demosaicing with the green pixels. Using the surrounding red and blue pixels, the algorithm calculates the full RGB value for each of these pixels. The same principle is then applied to the red and blue pixels, and a colorful photo finally ends up in the internal memory of your smartphone. This is a very simplified explanation: in practice, demosaicing algorithms are far smarter, for example in order to avoid color fringes on sharp edges.
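To make the idea of "borrowing" missing colors from neighbors more tangible, here is a deliberately naive sketch. It is not the method any real camera uses: for every pixel it simply averages all samples of each color found in the surrounding 3x3 window, with no edge handling beyond clipping at the border. The function name and the test scene are made up for illustration.

```python
BAYER_RGGB = (("R", "G"), ("G", "B"))

def demosaic(raw):
    """Naive demosaic: per pixel, average each color's samples in the 3x3 window.

    `raw` is a list of rows with one brightness value per pixel (at least 2x2).
    Returns a list of rows of (R, G, B) tuples.
    """
    height, width = len(raw), len(raw[0])
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            hits = {"R": 0, "G": 0, "B": 0}
            for ny in range(max(0, y - 1), min(height, y + 2)):
                for nx in range(max(0, x - 1), min(width, x + 2)):
                    color = BAYER_RGGB[ny % 2][nx % 2]
                    sums[color] += raw[ny][nx]
                    hits[color] += 1
            row.append(tuple(sums[c] / hits[c] for c in "RGB"))
        image.append(row)
    return image

# A uniformly orange scene as seen through the RGGB mask
scene = {"R": 200, "G": 100, "B": 50}
raw = [[scene[BAYER_RGGB[y % 2][x % 2]] for x in range(4)] for y in range(4)]

rgb = demosaic(raw)
print(rgb[1][2])
# prints: (200.0, 100.0, 50.0)
```

On a uniform scene the original color is recovered exactly. On sharp edges, this kind of plain averaging is precisely what produces the color fringes that smarter algorithms try to avoid.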

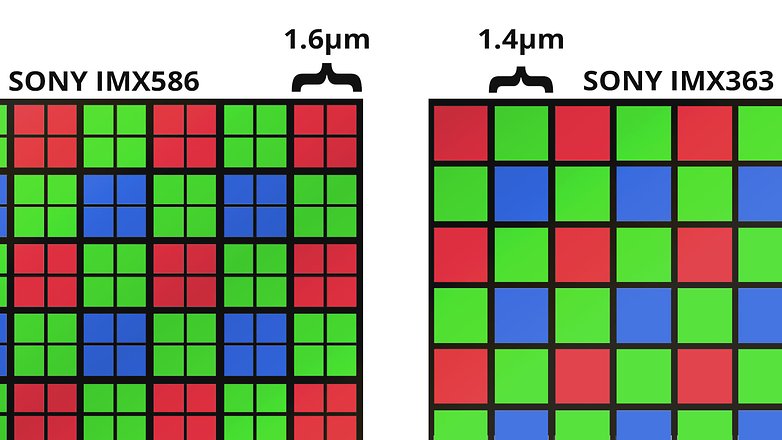

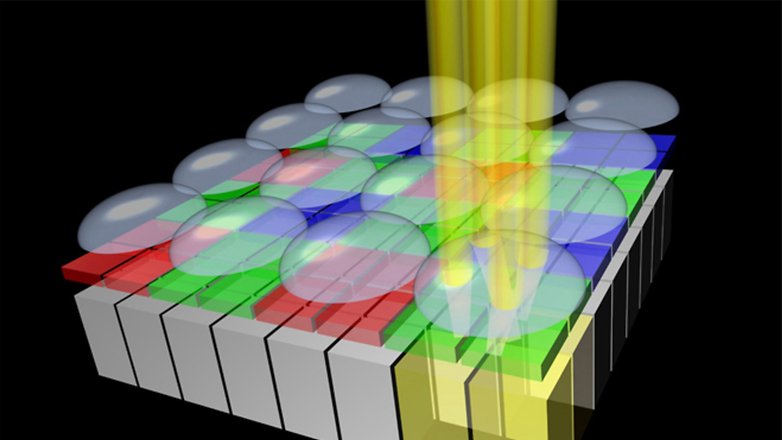

Quad Bayer and Tetracell

Whether it is 48, 64, or 108 megapixels, most of the current extremely high-resolution sensors in smartphones have one thing in common. While the sensor itself may actually have 108 million buckets of water, or rather light sensors, the Bayer mask above it has a resolution that is lower by a factor of four. So there are four pixels under each color filter.

This, of course, looks incredibly great on the datasheet. A 48-megapixel sensor! 108 megapixels! These are ballooned figures meant to titillate the imagination. And when it's dark, the tiny pixels can be combined into big superpixels that deliver great night shots.
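Both ideas, four pixels under each color filter and the merging into superpixels, can be sketched as follows. The function names are hypothetical, and summing the four values is an assumption. Real sensors may sum or average, in the analog or the digital domain.

```python
BAYER_RGGB = (("R", "G"), ("G", "B"))

def quad_bayer_color(row, col):
    """Color filter above a pixel when each filter spans a 2x2 block of pixels."""
    return BAYER_RGGB[(row // 2) % 2][(col // 2) % 2]

def bin_2x2(raw):
    """Sum each 2x2 block into one superpixel (even dimensions assumed)."""
    return [
        [raw[y][x] + raw[y][x + 1] + raw[y + 1][x] + raw[y + 1][x + 1]
         for x in range(0, len(raw[0]), 2)]
        for y in range(0, len(raw), 2)
    ]

raw = [
    [1, 2, 10, 11],
    [3, 4, 12, 13],
    [20, 21, 30, 31],
    [22, 23, 32, 33],
]
binned = bin_2x2(raw)
print(binned)
# prints: [[10, 46], [86, 126]]
```

Because all four pixels of a block sit under the same color, the binned result is an ordinary RGGB mosaic at a quarter of the resolution: a 48-megapixel readout becomes a 12-megapixel one.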

Paradoxically, however, many cheaper smartphones don't offer the possibility of taking 48-megapixel photos at all - or the full-resolution mode even delivers poorer image quality than the 12-megapixel mode in a direct comparison. In all cases known to me, the smartphones are so much slower when taking pictures at maximum resolution that the slight increase in quality is simply not worth the wait - especially since 12, 16 or 27 megapixels are completely sufficient for everyday use and do not exhaust your device's memory so quickly.

Most of the marketing propaganda that pushes megapixel counts into triple-digit territory may be ignored. But in practice, the high-resolution sensors are also physically larger - and image quality benefits noticeably.

Huawei's SuperSpectrum sensor: the same in yellow

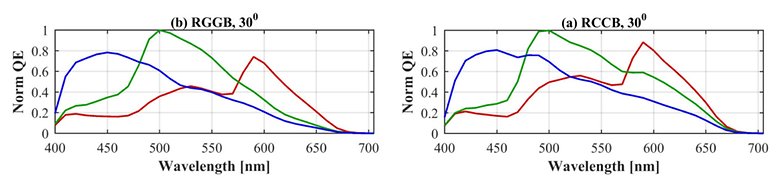

There are also some derivatives of the Bayer matrix, the most prominent example being Huawei's so-called RYYB matrix (see graphic above), in which the spectral sensitivity of the green pixels is shifted to yellow. This has - at least on paper - the advantage that less light is filtered out and more photons arrive at the sensor in the dark.

However, the wavelengths measured by the sensor are no longer as evenly distributed across the spectrum and as clearly separated from each other as with an RGGB sensor. In order to maintain accurate color reproduction, the demands on the algorithms that subsequently have to interpolate the RGB color values increase accordingly.

It is not possible to predict which approach will produce better photos in the end. Only practical and laboratory tests can show whether one technology or the other is 'better'.
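Why the demands on the algorithms grow can be hinted at with a strongly idealized sketch. It assumes that a yellow filter passes exactly the sum of red and green light, so green has to be reconstructed by subtraction. Real color pipelines use calibrated color matrices instead, and all names and numbers below are illustrative assumptions.

```python
import math

def green_from_ryyb(red, yellow):
    """Idealized assumption: a yellow pixel measures red plus green light."""
    return yellow - red

def noise_of_difference(noise_yellow, noise_red):
    """Independent noise sources add in quadrature when two values are subtracted."""
    return math.hypot(noise_yellow, noise_red)

green = green_from_ryyb(red=30.0, yellow=90.0)
green_noise = noise_of_difference(noise_yellow=4.0, noise_red=3.0)

print(green, green_noise)
# prints: 60.0 5.0
```

In this simplified model, the reconstructed green value is smaller than the yellow reading, while its noise is larger than that of either measurement. The extra light collected by the yellow pixels therefore does not translate one-to-one into cleaner color.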

Camera software: the algorithm is everything

Finally, I would like to say a few words about the algorithms that are involved. This is all the more important in the age of Computational Photography, where the concept of photography becomes blurred. Is an image composed of twelve individual photographs really still a photograph in the original sense?

What is certain is this: the influence of the image processing algorithms is far greater than that of the last bit of sensor area. Yes, an area difference by a factor of two makes a big difference. But a good algorithm also makes up a lot of ground. Sony, the world market leader in sensors, is a good example of this. Although most image sensors (at least technologically) hail from Japan, Xperia smartphones have always lagged behind the competition in terms of image quality. Japan can do hardware, but when it comes to software, the others are more advanced.

At this point, I would like to make a note about ISO sensitivity, which also deserves its own article: please never be impressed by ISO numbers. Image sensors have a single native ISO sensitivity in almost all cases*, which is very rarely listed in datasheets. The ISO values that the photographer or the camera's automatic mode sets while shooting are more like gain - a kind of "brightness control". How "long" the scale of this brightness control is can be defined freely, so writing a value like ISO 409,600 into the datasheet makes about as much sense as with a VW Golf... well, let's not venture down that road.

*There are actually a few dual ISO sensors with two native sensitivities in the camera market, such as the Sony IMX689 in the Oppo Find X2 Pro, at least that's what Oppo claims. Otherwise, this topic is more likely to be found in professional cameras like the BMPCC 6K.
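The "brightness control" idea can be illustrated with a simplified model in which gain scales signal and noise alike. The base ISO of 100 and the sample numbers are assumptions for illustration. The model also ignores noise that is added after amplification, which is one reason real cameras behave less neatly.

```python
import math

def apply_gain(signal, noise, gain):
    """Amplify a reading; signal and noise are scaled by the same factor."""
    return signal * gain, noise * gain

def stops_above_base(iso, base_iso=100):
    """Number of doublings between an assumed base ISO and the requested ISO."""
    return math.log2(iso / base_iso)

signal, noise = 40.0, 8.0
amp_signal, amp_noise = apply_gain(signal, noise, 16)

print(signal / noise, amp_signal / amp_noise)
# prints: 5.0 5.0
print(stops_above_base(409_600))
# prints: 12.0
```

The picture gets brighter, but the signal-to-noise ratio stays where it was. Under the assumed base of ISO 100, a figure like ISO 409,600 just means turning the knob up by twelve doublings.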

Autofocus: PDAF, 2x2 OCL & Co.

A small digression at the end, which is directly related to the image sensor: the whole subject of autofocus. In the past, smartphones determined the correct focus via contrast autofocus. This is a slow and computationally intensive edge detection, which you probably know from the annoying focus pumping.
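The principle of contrast autofocus can be sketched with a toy model. Everything here is hypothetical: `capture` stands in for a real camera and returns a one-dimensional step edge that gets blurrier the further the lens is from the (normally unknown) focus position, and the contrast metric is just one common choice.

```python
def capture(lens_position, true_focus=7, length=41):
    """Hypothetical camera: a step edge blurred by a box filter that widens with defocus."""
    edge = [0.0] * (length // 2) + [1.0] * (length - length // 2)
    radius = abs(lens_position - true_focus)
    blurred = []
    for i in range(length):
        window = edge[max(0, i - radius): min(length, i + radius + 1)]
        blurred.append(sum(window) / len(window))
    return blurred

def contrast(signal):
    """Sum of squared neighbor differences; sharper edges score higher."""
    return sum((b - a) ** 2 for a, b in zip(signal, signal[1:]))

def contrast_autofocus(positions):
    """Try every lens position and keep the one with the highest contrast."""
    return max(positions, key=lambda p: contrast(capture(p)))

best = contrast_autofocus(range(0, 15))
print(best)
# prints: 7
```

The sketch also shows why the method is slow: the camera has to take and analyze a frame at many lens positions, and it only knows it has passed the optimum once the contrast drops again. That is the focus pumping mentioned above.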

Most image sensors now integrate so-called phase-detection autofocus, also known as PDAF. PDAF uses special autofocus pixels built into the sensor, which are divided into two halves, compare the phases of the incident light and use the result to calculate the distance to the subject. The disadvantage of this technology is that the image sensor is "blind" at these points - and these blind focus pixels can affect up to three percent of the area, depending on the sensor.

Just a reminder: less surface area translates to less light/water and subsequently, poorer image quality. Moreover, the algorithms have to retouch these flaws, just as your brain has to make up for the "blind spot".

However, there is a more elegant approach that does not render pixels unusable. In places, the microlenses already present on the sensor are stretched across several pixels. Sony, for example, calls this 2x2 OCL or 2x1 OCL, depending on whether the microlenses combine four or two pixels.
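The phase comparison itself boils down to finding out how far the image seen by the "left" halves is shifted against the image seen by the "right" halves. The following sketch does this with a simple brute-force search. The function name and sample data are made up, and converting the shift into an actual lens movement depends on the lens and sensor and is not shown.

```python
def estimate_phase_shift(left, right, max_shift):
    """Return the shift (in pixels) that best aligns `right` with `left`,
    using the mean absolute difference over the overlapping samples."""
    best_shift, best_error = 0, float("inf")
    for shift in range(-max_shift, max_shift + 1):
        pairs = [
            (left[i], right[i + shift])
            for i in range(len(left))
            if 0 <= i + shift < len(right)
        ]
        error = sum(abs(a - b) for a, b in pairs) / len(pairs)
        if error < best_error:
            best_shift, best_error = shift, error
    return best_shift

left = [0, 0, 0, 1, 5, 9, 5, 1, 0, 0, 0, 0, 0, 0]
right = [0, 0, 0, 0, 0, 0, 1, 5, 9, 5, 1, 0, 0, 0]  # same profile, 3 pixels later

shift = estimate_phase_shift(left, right, max_shift=5)
print(shift)
# prints: 3
```

A shift of zero would mean the subject is in focus. Unlike contrast autofocus, a single measurement yields both the size and the direction of the correction, so the lens can move to the target without hunting back and forth.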

We'll soon be devoting a separate, more detailed article to autofocus. What do you look out for in a camera when you buy a new smartphone? And which topics around mobile photography would you like to read more about? I look forward to your comments!

I have to disagree with you on Sony smartphone cameras. Xperia smartphones from the past could take photos as good as those of any other manufacturer, any day. It is the person using the camera who is at fault, that is the way to put it. Other smartphone cameras were built for children to use, Sony was built for adults to use.

Admin (Staff), Jun 26, 2020:

I agree to some degree. Early on, Sony's auto modes were lagging behind the rivals, but that was nothing that couldn't be fixed with some exposure control or use of the manual modes.

But in times of computational photography, where software matters more and more, Sony is lagging further and further behind. We're currently expecting the Xperia 1 II, and we'll be testing its camera in detail and comparing it to its rivals – stay tuned ;)

I just wish they would drop all the multiple sensor garbage, and just be done with it, and use ONE larger sensor, along the line of APS-C size or similar, which would be 6-10 times larger than most smartphone sensors. But, the retractable lens and camera bump on the back, would cause panic attacks to the "stylish & colorful crowd".

What a lot of people don't understand is that a camera sensor's job is to capture as much available light as possible. Hard to do without software "tricks" when you have super tiny smartphone sensors. Adding megapixels is in theory, good when you zoom & crop, and allows you to lose detail after zooming in. Stuffing bazillions of super tiny sensors that close together also creates another issue in less than perfect light conditions. The signal gain is so high, the crosstalk envelope goes up, requiring the camera software to try to knock down the "noise" (the sparkles in a low light photo), then try to use software to bring back the detail, which can result in a "muddy" looking photo.