After Testing the Apple Vision Pro, This Feature Stands Out as My Favorite

Why isn't anyone talking about this feature of the Apple Vision Pro? No, I'm not referring to porn, even though studies in the US and UK found that for 67% of VR glasses owners, adult content was the number one reason for buying them. The feature I want to talk about is Apple Immersive. What is it? Why is it so good? And what does Apple do better here than other VR glasses manufacturers? At least five things come to mind!

Apple Immersive is a video format developed specifically for the Apple Vision Pro. Unlike other VR formats, it tackles several long-standing problems of VR video, from image sharpness to the sense of scale, and Apple is using its enormous market reach to set it apart.

Of course, professionally produced content is still rare, and like any new platform, the Vision Pro suffers from the proverbial chicken-and-egg problem. The few hours of video available in Apple Immersive hardly justify the $3,500 asking price. What is remarkable, however, is how Apple is tackling the content problem on its own, from start to finish.

Naturally, the Apple Vision Pro works with Apple's in-house streaming service, Apple TV+. Some of the content comes from Apple's own production company, Apple Studios. And yes, as we learned a few days ago, Apple even builds its own cameras for immersive content, as the company casually mentioned in its press release for the first Apple Immersive film.

Sports on the Apple Vision Pro

In addition to running its own production company, Apple also partners with dozens of other companies. The demo material already available on Apple TV+ includes an incredibly impressive NFL compilation with great game scenes and close-up, behind-the-scenes footage that takes you right into the locker room with the stars.

This is no joke: it could revolutionize the sports experience for many people. As my colleague and huge soccer fan Carsten Drees said in his podcast: “Before I watch a soccer match from a bad seat in the stadium, I'd rather watch it with these glasses.”

Speaking of soccer, here is an example from Europe, where I live. Apple is already partnering with the German Football League (DFL). The Supercup was produced as a 10K livestream in collaboration with Softseed, a Berlin start-up. The input came from eight cameras, which adds up to 80K resolution, so to speak. The challenge? A data volume of around 1 TB per minute and a "glass-to-glass" delay of 35 seconds, i.e. from the camera lens in the stadium to the VR glasses on the sofa.
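To put the 1 TB per minute into perspective, here is a quick conversion into a sustained data rate. It is plain arithmetic on the figure quoted above, assuming decimal units (1 TB = 10^12 bytes); the even split across the eight cameras is a hypothetical simplification, not something Softseed has published.

```python
# Back-of-the-envelope check of the Supercup figure: 1 TB of video per minute.
# Assumption: decimal units, i.e. 1 TB = 10**12 bytes.
TERABYTE = 10**12  # bytes
CAMERAS = 8

def gbit_per_second(bytes_per_minute: float) -> float:
    """Convert a data volume per minute into a sustained rate in Gbit/s."""
    bits_per_second = bytes_per_minute * 8 / 60
    return bits_per_second / 1e9

total_rate = gbit_per_second(1 * TERABYTE)
# Hypothetical: assumes all cameras contribute equally.
per_camera_rate = total_rate / CAMERAS

print(f"1 TB per minute ≈ {total_rate:.0f} Gbit/s in total")
print(f"≈ {per_camera_rate:.1f} Gbit/s per camera (even split assumed)")
```

At well over 100 gigabits per second, the raw feed is far beyond any home internet connection, which is why it has to be compressed heavily before it reaches the glasses.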

Together with the French developer immersiv.io, the DFL has also shown a prototype of how we might watch soccer matches in the future, although this example is less immersive and more informative.

Film and series productions, and the know-how behind them

Of course, sports are not the only content Apple is exploring. Apple Studios has just completed Submerged, its first immersive short film, directed by Oscar-winning filmmaker Edward Berger. This is exciting news for the entire industry: VR productions like this bring entirely new challenges and problems, but also new opportunities.

In a ProAV TV livestream, Craig Heffernan of camera and editing software maker Blackmagic made an interesting point: VR films are closer to theater than to cinema. Instead of seeing a scene through a static or moving window, with the camera lens directing their gaze, the audience is placed right inside the scene and can freely choose where to look.

So how do you direct your audience's attention in, say, a horror movie? This calls for new lighting techniques; the other option is sound, which we will come back to later in the context of the Apple Vision Pro.

In Submerged, this potential pitfall was largely solved with shallow depth of field, which bothered me a little at times. Unlike when watching a TV movie, I felt like an active participant, free to look at any part of the scene with my own eyes, including the areas that were out of focus. The immersive experience extended to sound as well, for instance when a submarine passes overhead with a deep, bass-heavy rumble.

Then there is the challenge of keeping the set clean. Where should the lights go? Where do you hide the actors' microphones? Fast camera movements are also off the table, since they can give the audience motion sickness. In the new Apple Immersive workflow, Blackmagic's DaVinci Resolve editing software automatically detects fast movements that could make viewers nauseous.

In any case, it is clear that Apple is stretching its thousand arms in all directions. It is forging partnerships, accumulating know-how, and heading in a completely different direction than the VR content we have seen to date.

The Apple Vision Pro codec

What else is special? Sure, I've seen enough VR content, and I'm sure some of you have, too. However, why the heck is Apple's video content so crazy sharp? The answer lies not (only) in the production quality itself, but also in the video codec and in the so-called projection. What is that anyway?

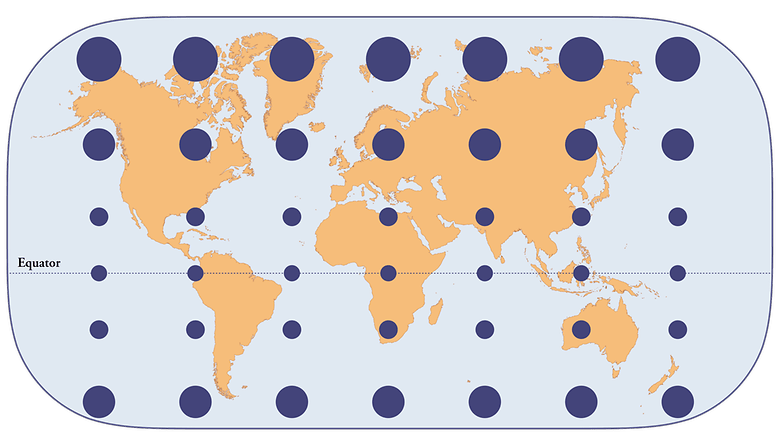

A video that you watch on your TV is stored in two dimensions, just like the picture on the screen. But what about a VR video in which you can look around? With 360-degree content, you can imagine the video as a sphere with your head at its center, and the video plays on the inside of that sphere. With 180-degree content, it is, mathematically speaking, a hemisphere.

To pack this spherical video into a video format that is still two-dimensional, you need a projection, just as a globe is flattened into a two-dimensional world map, for example with the Mercator projection. The challenge? Just as country sizes on a flat world map do not match the countries' actual sizes, not every area of the sphere is represented proportionally, so different parts of the image end up with different resolution and quality.
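The map analogy can be made concrete with the equirectangular projection, the most common layout for 360-degree video. It is not Mercator, and it is not what Apple uses (Apple's projection is undisclosed), but it shows the same effect. The sketch below computes how much of the sphere a single pixel covers in a given row of the flat frame; the 7680 × 3840 frame size is a hypothetical example.

```python
import math

def pixel_solid_angle(row: int, width: int, height: int) -> float:
    """Solid angle (in steradians) covered by one pixel in a given row of an
    equirectangular 360-degree frame. Row 0 touches the north pole."""
    d_lon = 2 * math.pi / width                   # equal longitude step per column
    lat_top = math.pi / 2 - row * math.pi / height
    lat_bottom = lat_top - math.pi / height       # equal latitude step per row
    # Area of a spherical patch: d_lon * (sin(lat_top) - sin(lat_bottom))
    return d_lon * (math.sin(lat_top) - math.sin(lat_bottom))

WIDTH, HEIGHT = 7680, 3840  # hypothetical 2:1 frame for 360-degree video

at_horizon = pixel_solid_angle(HEIGHT // 2, WIDTH, HEIGHT)
at_pole = pixel_solid_angle(0, WIDTH, HEIGHT)

# How many pole pixels fit into the area of one horizon pixel.
oversampling = at_horizon / at_pole
print(f"Near the pole, pixels are packed about {oversampling:.0f}x more densely")

# Sanity check: all pixels together cover the full sphere (4*pi steradians).
total = WIDTH * sum(pixel_solid_angle(r, WIDTH, HEIGHT) for r in range(HEIGHT))
print(f"total coverage: {total:.6f} sr vs 4*pi = {4 * math.pi:.6f}")
```

Every row gets the same number of pixels, but rows near the poles wrap around a much smaller circle, so they are heavily oversampled, while the horizon, where most of the action happens, gets the fewest pixels per unit of area. Projections designed specifically for VR try to even this out.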

Apple uses its own projection method, which even VR experts and developers such as Mike Swanson and Anthony Maës have so far only partially decoded, since DRM and content encryption make the video hard to inspect. It appears that Apple’s projection includes an unusual 45-degree rotation of the video content.

The Vision Pro can really deliver 3D for everyone

Oh, and then there's the 3D factor. For content to appear three-dimensional, each eye needs its own image. This is where MV-HEVC, the multiview ("MV") extension of the HEVC codec, comes into play. Instead of placing the images for both eyes side by side or on top of each other in a single frame, it stores them as separate views.
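The idea behind storing the second eye as a separate view can be illustrated with a toy version of inter-view prediction: keep one eye's image as the base view and store the other eye only as its difference from a shifted copy of the base. This is a deliberately simplified sketch, not MV-HEVC itself: the real codec predicts block by block inside HEVC, whereas this uses one row of synthetic pixels, a single global shift (the hypothetical `DISPARITY`), and Python's `zlib` as a stand-in compressor.

```python
import random
import zlib

random.seed(42)
WIDTH = 4096     # one row of 8-bit luma values per eye (toy data)
DISPARITY = 6    # hypothetical horizontal offset between the eyes, in pixels

# Synthetic left-eye row: a random walk, so neighboring pixels are similar.
left = []
value = 128
for _ in range(WIDTH):
    value = max(0, min(255, value + random.randint(-3, 3)))
    left.append(value)

# The right eye sees almost the same row, shifted, plus a little noise.
right = [max(0, min(255, left[max(0, i - DISPARITY)] + random.randint(-1, 1)))
         for i in range(WIDTH)]

def shifted(view, disparity):
    """Predict the other eye by shifting the base view sideways."""
    return [view[max(0, i - disparity)] for i in range(len(view))]

def encode_multiview(base, dependent, disparity):
    """Keep the base view as-is; store the dependent view as a residual."""
    prediction = shifted(base, disparity)
    residual = [(d - p) % 256 for d, p in zip(dependent, prediction)]
    return bytes(base), bytes(residual)

def decode_dependent(base_bytes, residual_bytes, disparity):
    """Rebuild the dependent view from the base view plus the residual."""
    prediction = shifted(list(base_bytes), disparity)
    return [(p + r) % 256 for p, r in zip(prediction, residual_bytes)]

base_bytes, residual_bytes = encode_multiview(left, right, DISPARITY)
assert decode_dependent(base_bytes, residual_bytes, DISPARITY) == right  # lossless

coded_alone = len(zlib.compress(bytes(right), 9))
coded_as_residual = len(zlib.compress(residual_bytes, 9))
print(f"right eye coded on its own: {coded_alone} bytes")
print(f"right eye coded as residual: {coded_as_residual} bytes")
```

Because the two eyes see nearly the same thing, the residual is mostly tiny values and compresses far better. A 2D player could also simply decode the base view and ignore the rest, which is one advantage of multiview coding over squeezing both eyes into one frame.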

From these two views, Apple renders the video stream in real time for each individual user, taking into account the viewer's interpupillary distance and any additional lenses in use. This Dynamic Bespoke Projection is meant to solve the problem that the distance between the camera's two lenses practically never matches the viewer's exact eye distance. As Anthony Maës put it in his Medium article, a screenshot of Apple Immersive playback would look different for virtually every user.
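Why the camera's lens spacing matters can be shown with a simple small-angle model of stereo vision. It assumes the headset reproduces viewing angles 1:1 and that the brain judges distance purely from disparity; the 60 mm lens spacing and 70 mm eye distance are hypothetical values, and `disparity_correction` only illustrates the principle, since Apple has not published how Dynamic Bespoke Projection works.

```python
def perceived_distance(true_distance_m: float,
                       camera_baseline_mm: float,
                       viewer_ipd_mm: float) -> float:
    """Distance at which a viewer perceives an object (small-angle model)."""
    # Angular disparity recorded by the two camera lenses, in radians.
    disparity_rad = (camera_baseline_mm / 1000) / true_distance_m
    # The viewer triangulates that disparity using their own eye spacing.
    return (viewer_ipd_mm / 1000) / disparity_rad

def disparity_correction(camera_baseline_mm: float, viewer_ipd_mm: float) -> float:
    """Hypothetical factor by which a per-viewer renderer would scale all
    disparities so that perceived distances match the real ones."""
    return viewer_ipd_mm / camera_baseline_mm

# Hypothetical numbers: lenses 60 mm apart, viewer with a 70 mm eye distance.
wall = perceived_distance(3.0, camera_baseline_mm=60, viewer_ipd_mm=70)
print(f"A wall 3 m away appears to be {wall:.1f} m away")

factor = disparity_correction(60, 70)
# Scaling the disparity is equivalent to capturing with a scaled baseline.
corrected = perceived_distance(3.0, camera_baseline_mm=60 * factor, viewer_ipd_mm=70)
print(f"after scaling disparities by {factor:.2f}: {corrected:.1f} m")
```

In this model, lenses closer together than your eyes make everything look farther away and therefore bigger, while lenses farther apart than your eyes make scenes look like miniatures.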

As a result, VR videos on the Apple Vision Pro do not suffer from the size-perception problems often seen on other headsets. There, the feeling that rooms are the wrong size, for instance far too huge, always lingers in the back of your mind. In mixed reality, such a mismatch would also make grabbing things feel strange.

Back to the video content: the image sharpness was incredibly good. In the private concert recorded for the Apple Vision Pro, Alicia Keys appears incredibly lifelike, something other VR videos currently fail to achieve despite their 8K resolution. Unfortunately, we cannot show you screenshots or video snippets of the Apple Immersive films due to DRM restrictions.

According to Apple, the video format also improves compression by a whopping 30 percent, because the images for the two eyes are very similar, so one view can largely be encoded as a difference from the other. Given the sometimes measly data rates in some countries and the gigantic video files at 8K per eye, 90 hertz, and 10-bit color depth, every bit saved is welcome.
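To see why every saved bit matters, here is the raw, uncompressed data rate for the figures quoted above. The assumptions are mine: "8K" is taken as 7680 × 4320 pixels per eye (Apple's actual per-eye resolution may differ), with 4:2:0 chroma subsampling, i.e. 1.5 samples per pixel. The 100 Mbit/s stream used to illustrate the 30 percent saving is a hypothetical round number, not an Apple specification.

```python
def raw_bitrate_gbit(width: int, height: int, fps: float, bit_depth: int,
                     eyes: int = 2, samples_per_pixel: float = 1.5) -> float:
    """Uncompressed video data rate in Gbit/s (1.5 samples/pixel = 4:2:0)."""
    bits_per_frame = width * height * samples_per_pixel * bit_depth
    return bits_per_frame * eyes * fps / 1e9

def gigabytes_per_hour(mbit_per_s: float) -> float:
    """Storage or download volume of one hour of video at a given bitrate."""
    return mbit_per_s * 1e6 * 3600 / 8 / 1e9

raw = raw_bitrate_gbit(7680, 4320, fps=90, bit_depth=10)
print(f"uncompressed: {raw:.1f} Gbit/s")

HYPOTHETICAL_STREAM_MBIT = 100.0  # assumed bitrate without the 30% saving
with_saving = HYPOTHETICAL_STREAM_MBIT * (1 - 0.30)
for rate in (HYPOTHETICAL_STREAM_MBIT, with_saving):
    print(f"{rate:.0f} Mbit/s: {gigabytes_per_hour(rate):.1f} GB per hour")
```

Under these assumptions, the uncompressed signal runs to tens of gigabits per second, and even after heavy compression a 30 percent saving shaves many gigabytes off every hour of video.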

The Vision Pro is also an Audio Pro

The sound in the Vision Pro is just as impressive as the picture. The first time you stand next to someone watching a movie with this thing, you think: Wow, that's loud, coming out of those speakers! At least that's the impression if you judge by how much sound usually leaks out of headphones. Wearing the glasses yourself, it is not that loud.

The exciting thing, however, is how the Apple Vision Pro handles sound. To simulate virtual reality as realistically as possible, the sound has to reach your ears exactly as it would in the scene you are watching. If you were watching a movie scene set in a church, for example, you would hear clear reverberation because of the large space and the typically bare walls. In a low room with curtains, on the other hand, like the one in the Alicia Keys private concert, everything would sound very close and soft.
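How different a church and a curtained room sound can be estimated with Sabine's classic reverberation formula, RT60 = 0.161 · V / A, where V is the room volume in cubic meters and A the total absorption (each surface's area times its absorption coefficient). This is a statistical rule of thumb, not the audio ray tracing used by the Vision Pro, and the room sizes and absorption coefficients below are illustrative assumptions.

```python
from dataclasses import dataclass

@dataclass
class Surface:
    area_m2: float
    absorption: float  # share of sound energy absorbed, 0..1 (illustrative)

def box_room(length: float, width: float, height: float,
             floor: float, ceiling: float, walls: float) -> tuple[float, list[Surface]]:
    """Volume and surfaces of a simple box-shaped room."""
    surfaces = [
        Surface(length * width, floor),
        Surface(length * width, ceiling),
        Surface(2 * (length + width) * height, walls),
    ]
    return length * width * height, surfaces

def sabine_rt60(volume_m3: float, surfaces: list[Surface]) -> float:
    """Seconds until sound decays by 60 dB, according to Sabine's formula."""
    absorption_area = sum(s.area_m2 * s.absorption for s in surfaces)
    return 0.161 * volume_m3 / absorption_area

# Hypothetical church: 30 x 15 x 12 m, stone walls, pews on the floor.
church_rt60 = sabine_rt60(*box_room(30, 15, 12, floor=0.15, ceiling=0.05, walls=0.03))
# Hypothetical low room: 6 x 5 x 2.5 m, carpet and curtains all around.
room_rt60 = sabine_rt60(*box_room(6, 5, 2.5, floor=0.3, ceiling=0.1, walls=0.5))

print(f"church: about {church_rt60:.1f} s of reverberation")
print(f"curtained room: about {room_rt60:.2f} s")
```

Even with these rough numbers, the church rings for several seconds while the curtained room falls silent in a fraction of a second, which is exactly the difference a convincing immersive soundtrack has to reproduce.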

How does the Vision Pro get sound into your ears exactly as intended, regardless of whether you're sitting in a church, in a closed room, or in your living room?

A technology called Spatial Audio Engine is used to achieve this. As VRTonung reported, the Apple Vision Pro continuously scans the room with its built-in cameras and constantly adapts the sound to your room and even to its surface textures. It does this with audio ray tracing, which simulates how sound waves propagate in a room. Meta also recently introduced this feature on the Quest 3.

What's more, everyone perceives sound differently depending on the shape of their head and ears. Using a so-called Head-Related Transfer Function (HRTF), the Vision Pro also adapts the sound to the shape of your head. This is computational audio at work. We have already covered HRTFs in more detail in another context.
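One ingredient of an HRTF, the interaural time difference (how much later a sound from the side reaches the far ear), already shows why head shape matters. The sketch uses Woodworth's classic spherical-head approximation; a real HRTF also captures frequency-dependent filtering by the outer ear, and the head radii are illustrative values, not a description of how Apple personalizes its audio.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C

def woodworth_itd(azimuth_deg: float, head_radius_m: float) -> float:
    """Interaural time difference in seconds for a spherical head
    (Woodworth's formula, valid for azimuths between 0 and 90 degrees)."""
    theta = math.radians(azimuth_deg)
    return head_radius_m / SPEED_OF_SOUND * (theta + math.sin(theta))

for radius_cm in (8.0, 8.75, 9.5):  # hypothetical small, average, large heads
    itd_us = woodworth_itd(90, radius_cm / 100) * 1e6
    print(f"head radius {radius_cm} cm: ITD at 90 degrees ≈ {itd_us:.0f} µs")
```

Differences of a few dozen microseconds sound tiny, but they are well within what human hearing can detect, so a one-size-fits-all head model can place virtual sound sources slightly off.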

Apple and the camera industry

One last reason why the Apple Vision Pro could take off as a video device: more and more “mainstream” cameras are coming onto the market that are made exclusively, or at least partly, for Apple Immersive. In addition to Apple's own production camera, there is Blackmagic's URSA Cine Immersive, which should be available at the beginning of 2025 and targets professionals at an estimated price of $20,000 to $30,000.

Canon has also announced a special, not yet priced stereoscopic lens for Vision Pro content for the $1,299 EOS R7, which will be a significantly cheaper option. Of course, you can also record 3D videos for the headset with the Apple Vision Pro itself or with an iPhone, although these are spatial videos rather than Apple Immersive. Incidentally, this is also why the cameras on the iPhone 16 are now aligned vertically, so that they sit side by side when you film in landscape.

Conclusion: The time is now

Now, of course, you will say: in the end, these cameras will probably end up being used for porn again.

That doesn't worry me, because it has always been this way: the two major drivers of digital technologies are the porn industry and the military. We recently reported on how the latter uses VR goggles in the lead story of our newsletter on VR in the military, for which our team even took part in a police operation in virtual reality.

And as I mentioned, breakthroughs in other areas tend to follow the porn industry and the military at some point. I believe the time for VR video is now.