Magic Eraser: This tool shines on Google's Pixel 6!

With the Pixel 6 armed with the power of the new Tensor SoC, Google introduced an intelligent eraser tool alongside its latest handset. Google's claim that the feature works exclusively on the latest generation of Pixels is not quite accurate: similar tools have been around for a long time on Samsung phones and in professional photo editors. NextPit pits Google's Magic Eraser against Samsung and Adobe Photoshop!

In the Pixel 6, Google continues to set the course towards Artificial Intelligence and Machine Learning. For this, the search giant has even developed its own SoC known as "Tensor".

The result is said to be optimized performance, which makes selected features exclusive to the Pixel 6. Among other things, there's a Magic Eraser tool in the "Google Photos" app that automatically deletes unwanted elements in your photos.

The challenge with manually erasing flaws and distracting elements in a photo is the amount of skill and work required to reconstruct the background of the image. Algorithms are capable of doing this to a certain degree, and that is not a new discovery with the Pixel 6: Samsung offers an identical feature in the "Labs" section of its Gallery app.

Not only that, Adobe Photoshop also has a couple of smart erasers available for users. I've pitted all three "magic erasers" against each other, so let us get down to it!

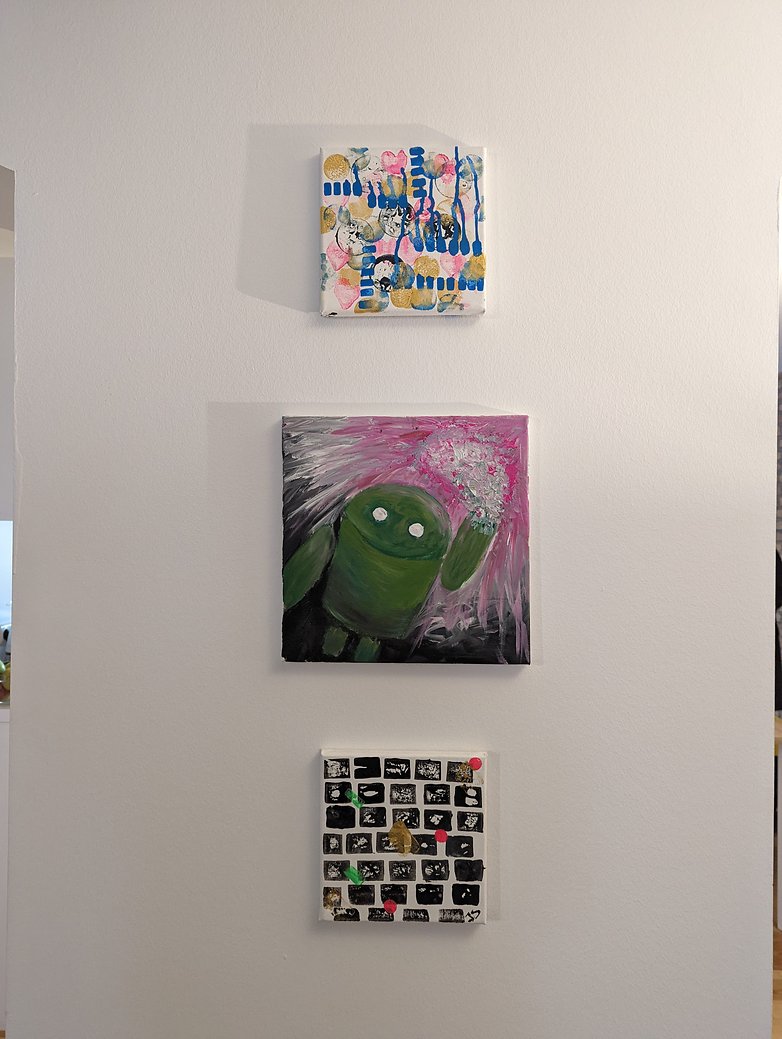

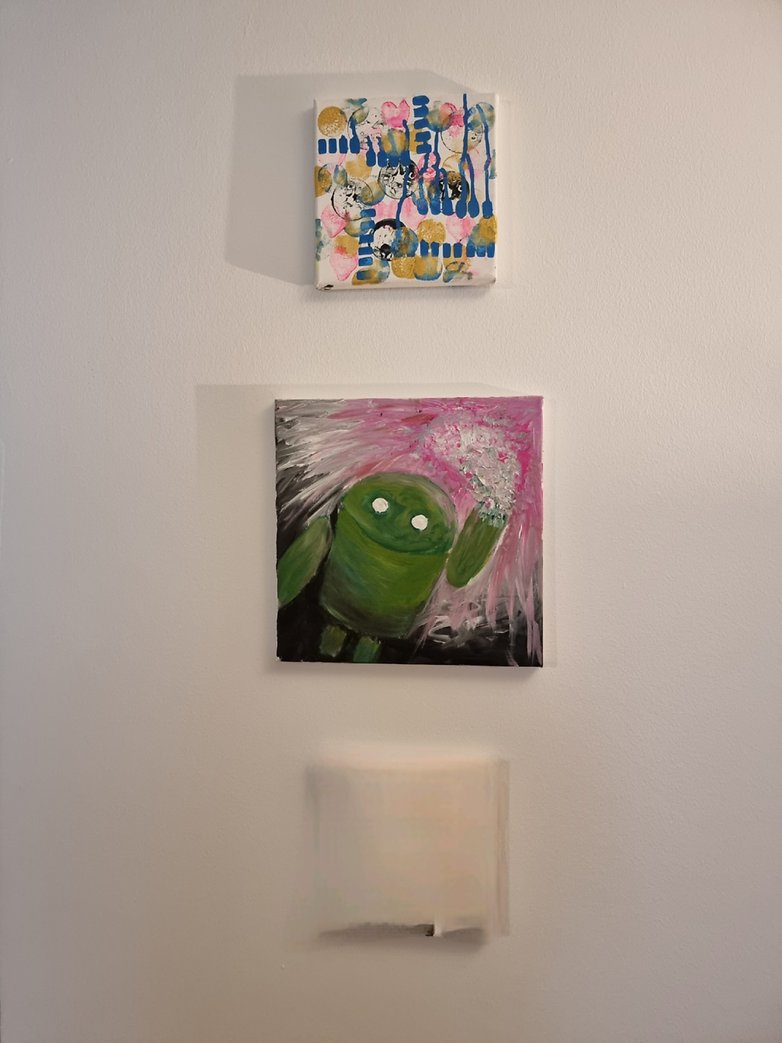

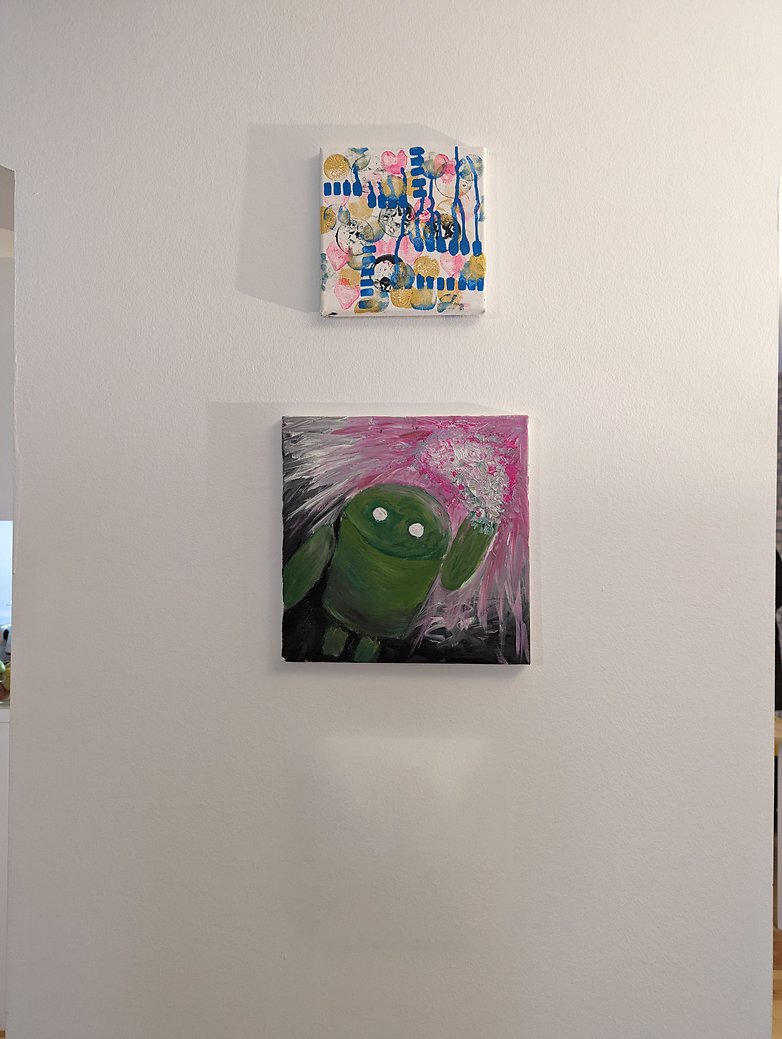

Example 1: Picture from the wall

Not all examples are as perfect as Google would like them to be. That's because the company places emphasis on you being able to remove distracting elements from the background. In the following example, the distracting element is located in the foreground. However, removing it shouldn't be too difficult, right? After all, the area that does need to be replaced is monochrome in nature and is best softened with a slight brightness gradient.

Adobe's tool clearly takes first place here, picking up three points. Google follows with two points, while Samsung comes in last! So there's only one point for Samsung, which left quite a bit of the image behind.

Example 2: Zois freezes and magic erasers have to fix it

In the next picture, the fan in the background was intentionally placed, as Zois is caught on camera working intently. While he wasn't bothered by the fan, it triggered me. The background here is also monochrome, but the offending object is a bit more complex to 'erase'. But since I took the photos using Portrait Mode, the fan is a bit blurry.

Again, Adobe performed the best here in my eyes. The fan is completely removed and the shading is best matched to the wall. Google has also removed the fan, but there are some nasty artifacts left behind. Samsung still left something as well, and once again, it will only receive one point. Adobe three, Google two, and Zois is sweating!

Example 3: More complex background in front of a door

Why is there a garbage bag in front of the entrance? It doesn't matter. It bothered me a lot in my photo of Camila, so I had to digitally remove it. Here's a winner other than Photoshop at long last!

This is because Google's Magic Eraser delivered the best results here. The trash bag is completely gone and the background has been reconstructed quite well. The image is exciting because you can see how Adobe works, as Photoshop picks another image element and tries to intelligently place an overlay on top of the background. Google gets three points, Samsung scores two, and Adobe receives only one.

Example 4: Camila has turned on the lights!

My fourth example would be easy to fix on the computer with the clone stamp, as the background is a single color and you just have to brush over it quickly. As expected, the overall results were really good!

The clear winner is Adobe with its "copy-and-paste strategy"! Here you really can't see any trace of the lamp. With Google and Samsung, you can still see some shading. The two are about even here, so I'm awarding each the same score. Adobe gets three points, Samsung and Google two each.

Example 5: Simply make annoying e-scooters disappear

With the next experiment, I fulfill the wish of many Berliners! That is because I had one of the annoying e-scooters which plague the cityscape erased. Once again, the photo was taken using Portrait Mode, so expect some blurring in the background.

Adobe is the winner again! You can't see a shadow here, nor any image flaws, nor is there anything of the scooter to be seen. Since Google created the most artifacts and hit the window sill a bit, Google ends up last. Samsung consequently receives two points, Google one, and Adobe is king of the hill yet again with three.

Example 6: Can I delete my (fictional) ex-girlfriend from photos?

The question I'm sure we all asked ourselves during Google's presentation: Can I finally delete the ex-girlfriend or ex-boyfriend from shared vacation pictures? This task is a mammoth one, especially when it comes to shared photos, because there is a lot that needs to be reconstructed here, and not just a failed relationship! For this test, Camila and I recreated such a picture.

Samsung's result here is more painful than any previous memory of the relationship! Google does serve a good replacement for the image in the background but messed up my jacket. Adobe copied the edge of my cap several times on my sleeve. All three results are useless, but Samsung's the worst of the lot. Therefore, Google and Adobe will share the spoils at two points apiece and only one for Samsung.

Example 7: Cleaning up important Berlin landmarks

The Berlin Wall Monument close to the NextPit office was meant to memorialize the Berlin Wall! Unfortunately, it's not always treated with respect and there was a mask in the grass in front of it. Will the smartphones and computer program manage to weed out this impurity?

This is where I declare Samsung the winner of a round for the first time! The Galaxy S21 Ultra was able to recreate the lawn in the most realistic manner, simulating the darkness of the grass as well. Adobe did slightly worse at this, and on Google's Pixel 6, you can clearly see a dark patch where the mask used to be. Samsung gets three points here for its effort, Adobe two points, and Google nets one point!

Example 8: Difficult test with a helmet in the grass

For this example, I put a very high-contrast subject in the grass, and it takes up quite a bit of space in the image. So let's see again just how well it can be replaced.

Google did design a nice grassy knoll here, but you can still see the outline very clearly. Samsung has made a nice painting, but it doesn't look like an eraser. Only Adobe was able to convince my eyes here, and very well at that. Therefore, three points for Adobe and only one each for Google and Samsung.

Example 9: Will the eraser turn me into a ghost?

Another test that I personally found to be very funny. Can I also erase myself with the Magic Eraser tool? A mirror selfie sets the stage here and let's see if I'm still there!

Well, thank goodness I'm still visible in every photo! There really are hardly any clues here for the algorithms to work with among all three programs. Basically, the results end up being disastrous. I give all three a consolation point!

Conclusion: The pro tool is ahead!

For my evaluation, I added up the points of all three competitors. The final results look as follows:

- Adobe with 21 points

- Google with 15 points

- Samsung with 14 points
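These totals can be reproduced from the points awarded in Examples 1 to 9. Here is a minimal sketch of that tally; the names `SCORES`, `totals`, and `ranking` are my own illustrative choices, and the per-example numbers are simply copied from the sections above:

```python
# Points per example (Example 1 through Example 9), copied from the text.
# The variable and function names are illustrative, not part of any tool.
SCORES = {
    "Adobe":   [3, 3, 1, 3, 3, 2, 2, 3, 1],
    "Google":  [2, 2, 3, 2, 1, 2, 1, 1, 1],
    "Samsung": [1, 1, 2, 2, 2, 1, 3, 1, 1],
}


def totals(scores):
    """Sum the points of each competitor across all examples."""
    return {name: sum(points) for name, points in scores.items()}


def ranking(scores):
    """Return (name, total) pairs sorted from highest to lowest total."""
    return sorted(totals(scores).items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, total in ranking(SCORES):
        print(f"{name} with {total} points")
```

Adding up each row gives the same ranking as in the list above, with Adobe well ahead and the two smartphones separated by a single point.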

Apparently, it was a close race between the two smartphones, with Adobe making both of them look pretty out of date. It is important to mention that Samsung's feature is still in beta. That is why I'm really surprised by Google's result, as Google is well known for its camera software and computational photography. Google's feature did disappoint me a bit, as the results looked far more impressive in the product videos.

For Adobe, we went with the eraser tool that is closest to Google's and Samsung's in terms of user-friendliness. There's another tool, "Content-Aware Fill", where you have a bit more control. With this, we could have avoided bloopers like the reproduced jeans-wearing leg in the garbage bag photo. This is where Adobe is able to really flex its muscles.

However, Google needs more data for better results, and the company is most likely to obtain that from its users. So it remains to be seen whether Google can train its artificial intelligence further in the future. Do you want to know how the photo quality of the two smartphones differs in detail? Then take part in our camera blind test or check out the results later.