How YouTube's algorithm facilitates child exploitation

YouTube's history with content moderation has been troubled, to say the least. The video sharing website has often been slow to react to obscene and inappropriate content, even when the videos in question were aimed at children. Who can forget the infamous ElsaGate scandal or Logan Paul's "suicide forest" video? Yet neither is as shocking as the finding that YouTube's recommendation algorithm facilitates the sexual exploitation of minors.

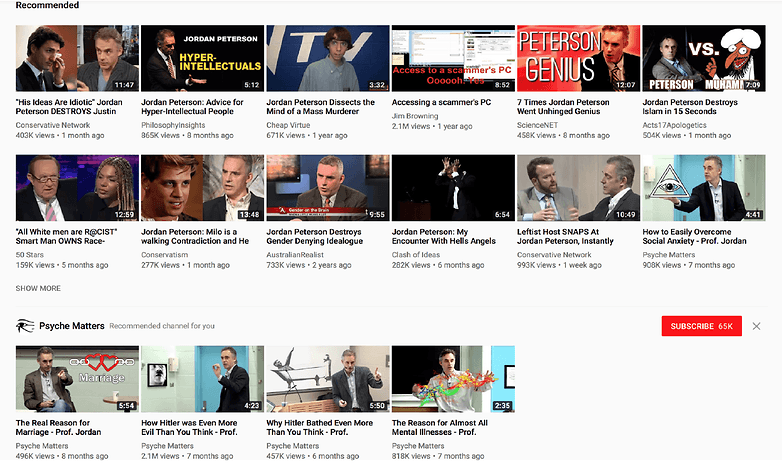

This was first discovered by YouTuber MattWhatItIs (Matt Watson). In a video on the subject, he demonstrates how a relatively innocuous search term like 'bikini haul', followed by a few clicks on related videos, can lead you into what he describes as a pedophilia "wormhole". He used a brand new YouTube account and a VPN so that no previous activity would affect the recommendations. The result: both the sidebar and the front page were filled with videos of minors, some in various states of undress.

We have decided not to embed the video directly in this article. If you decide to watch it, you can do so here, but be warned that it includes inappropriate content. Nevertheless, it demonstrates very clearly how the YouTube algorithm works in favor of child predators.

Watson's experiment is disturbingly easy to replicate, and I had a very similar experience when I tried what he described in the video. What causes this? The obvious culprit is YouTube's recommendation algorithm. If you've ever clicked on a top-list video, you likely know how aggressively it recommends the same type of content over and over. Although this is just speculation, it may have been designed this way to encourage binge watching and therefore generate more advertising revenue for the platform. However, it was likely never intended to be as pushy as it currently is.

Either way, YouTube should be aware of how easily the algorithm can be exploited. Matt Watson's experiment also revealed plenty of innocent videos (kids doing gymnastics or talking in front of the camera) that had been hijacked by a pedophile ring. Predators would post disgusting sexual comments, as well as timestamps pointing to moments where the children were in compromising or suggestive positions. Worse, the videos that showed up in these recommendations were often reuploads, many of which were monetized.

What steps is YouTube taking?

This is happening despite YouTube's promises to heavily moderate any content aimed at or involving children. In late 2017, the video sharing platform resolved to completely disable comments on children's videos where inappropriate comments were spotted. At the time, the company also removed adverts from over 2 million videos and 50,000 channels masquerading as family entertainment. Yet, as Matt Watson's findings show, the system can still fail.

Even when YouTube does try to get things under control, many innocent content creators get caught in the crossfire. Only days ago, YouTube's automated systems wrongly and unceremoniously terminated several popular Pokémon GO channels because they mistook the abbreviation CP (combat points) for something much more sinister.

In case anyone at @TeamYouTube is taking notes on today's mishap, CP stands for Combat Points. I'm on board with fighting back against inappropriate content, but your algorithm needs a lesson in CONTEXT.

— Nick // Trainer Tips (@trnrtips) February 17, 2019

Also, just to reiterate, MANUAL REVIEW BY A HUMAN BEFORE TERMINATION

This highlights the limitations of existing algorithms and AI. What can YouTube or Google realistically do about it? 400 hours of video are uploaded to the website every minute, and even artificial intelligence can struggle under the weight of so much data. YouTube has become such a juggernaut in the online video market that it's impossible to police all of its content both quickly and accurately. Yet limiting the number of videos that can be uploaded could cripple the platform completely, considering Google is already losing money on YouTube.


Is hiring more people the answer to the problem? The company already took this step in 2017, bringing 10,000 people on board with the express purpose of cleaning up YouTube. According to The Telegraph, the video sharing website also works with a Trusted Flagger community made up of law enforcement agencies and child protection charities. However, over a 60-day period the community raised concerns in 526 cases but received responses to only 15 of its reports.

One member told The Telegraph: "They [YouTube] have systematically failed to allocate the necessary resources, technology and labour to even do the minimum of reviewing reports of child predators in an adequate timeframe." They also suggested that YouTube needs to "change their stance from reactive to proactive" if anything is to change.

It's hard to disagree. A first and important step toward becoming more proactive would be to anticipate the presence of such content and tweak the algorithm accordingly, either making it harder to discover or removing it completely. We might be expecting a bit too much, however: YouTube has not even commented on Matt Watson's findings, nor responded to his hashtag #YouTubeWakeUp, which has been showing up in replies to the company's tweets.

What you can do

Of course, YouTube is responsible for what happens on its platform. Children under the age of thirteen are technically not allowed to have an account on the website unless it's created via Family Link, but this is hard to enforce. This is why parents need to take responsibility too, especially if they upload videos of their kids or let them use YouTube unsupervised. There are no excuses: as more and more millennials become parents, they should both know the dangers the Internet poses and have the technical know-how to monitor their children's online activity.

Simply talking to your children and explaining that not everyone online is well-meaning can also go a long way. Although the web is quite different from when I first began using it, my parents taught me not to reveal personal details about myself to strangers, not to engage with people behaving strangely, and to avoid chat rooms in general. I took this advice to heart, and it definitely made for a safer online experience.

So, yes, while it's disgusting that the sexual exploitation of minors has been taking place right under YouTube's nose, we should also take steps to protect children ourselves. Relying too heavily on the algorithm that facilitated the problem in the first place won't help, and unless Google and YouTube actively work to change it, it will likely do more harm than good.

What is your opinion on the matter? Were you aware of the problem? Let us know in the comments.

Does anybody know about Common Sense Media?

From Wikipedia:

Common Sense Media (CSM) is a San Francisco-based non-profit organization that provides education and advocacy to families to promote safe technology and media for children.

Common Sense Media reviews books, movies, TV shows, video games, apps, music, and websites and rates them in terms of age-appropriate educational content, positive messages/role models, violence, sex and profanity, and more for parents making media choices for their kids. Common Sense Media has also developed a set of ratings that are intended to gauge the educational value of videos, games, and apps.

I am no longer a small boy, but I still use YouTube in Restricted Mode, which filters out potentially mature content and hides comments, because even the sight of disturbing images and videos annoys me very quickly. For research purposes, whenever I want to read other people's opinions, I copy the video's URL and open it in my browser's incognito mode to read the comments, since that's the only way.

Similarly, I use search engines in strict mode. I don't use Google Search; instead I rely on Startpage (primarily) and DuckDuckGo.

If you would like to know, there is an easy way to make Startpage your default search engine in Google Chrome or Microsoft Edge for Android.

There is no direct setting for it, because Startpage works differently.

Just Google "How do I make Startpage the default search on Chrome for Android?"

Then click the link to the corresponding help page provided by Startpage.

I actually discovered that method, and for that the company behind Startpage offered me a free one-year subscription to StartMail, a private and encrypted email service. I humbly declined, because unconditional, selfless service to people is my way of life and keeps me happy.