Google Gemini Live: A Moon Landing Moment for AI on Smartphones?

Google today announced four new smartphones in the Pixel 9 series, a new Pixel Watch that comes in two sizes for the first time, and new Pixel Buds. While the hardware alone would fill an evening, the real star is something else entirely: Gemini, and especially Gemini Live. Is this the moon landing moment for artificial intelligence?

What is Gemini—and How Far Can It Go?

Let's take a step back: Google groups a somewhat confusing number of different things under the Gemini umbrella. First, there are the generative AI models Gemini Nano, Gemini Flash, Gemini Pro and Gemini Ultra. These models are released in successive versions; the most powerful is currently “Gemini 1.5 Pro”, which outperforms the competition from OpenAI & Co. in various AI benchmarks.

However, Gemini has also been the name of Google's chatbot, formerly known as Bard, since the beginning of 2024. That chatbot is now getting a voice version called “Gemini Live”, in the style of the legendary Voice Mode for ChatGPT with GPT-4o, which was announced one day before Google I/O in May 2024. OpenAI's Voice Mode is still not even available as a broad beta and has made headlines more for creepy failures than for a surprise launch.

By the way, Gemini also refers to various subscription tiers. Plain “Gemini” is the free tier of Google's AI, based on the “Gemini Pro” model. Access to the aforementioned “Gemini 1.5 Pro”, however, requires the “Gemini Advanced” subscription, which is part of the Google One AI Premium plan for $19.99 per month. I won't even get into Gemini Business here. But now to the supposed moon landing.

Gemini Live: The “Star” of the Show

In addition to the thirty-four different Geminis, there is another feature of the same name that points the way to the coming years: Gemini Live. This is a so-called conversational model that allows for natural conversations. You no longer exchange turn-based voice messages with the AI model, each of which is transcribed as text or read back aloud. The difference in dynamics is like comparing chess to a sprint race.

In the live demo at the “Made by Google” event, Jenny Blackburn asked for a fun, educational chemistry activity for her niece and nephews, with a touch of magic. The suggestions were a magic volcano, a homemade lava lamp or invisible magic ink.

Jenny chose the magic ink, which over the course of the conversation evolved into black-light ink and was given the project name “Secret Message Lab”, along with the assurance that the experiment would not make too much of a mess.

What impressed was less the result itself, which could easily have been googled, than the journey to it. With Gemini Live, the Internet becomes your conversation partner, and in the future so will your own life, which several new features now make searchable with Gemini AI.

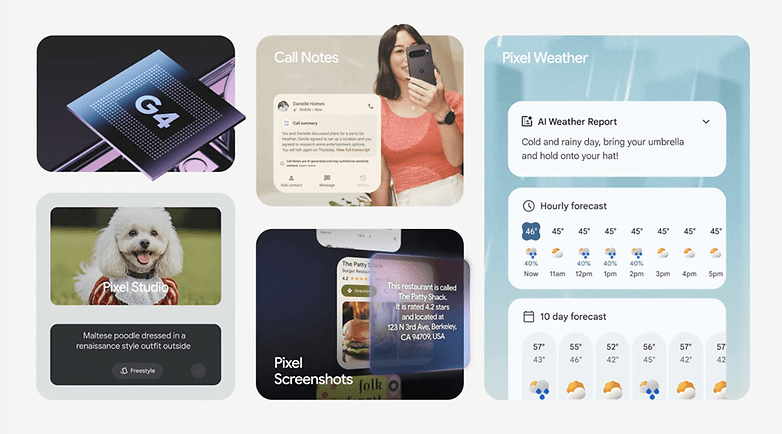

The “Call Notes” function, for example, transcribes your phone calls after notifying the other party and lets you search through them afterwards. “Pixel Screenshots” turns your neglected collection of screenshots of supposedly important things into a searchable database of personal notes. And with the Workspace Extensions, you can talk to your Google Calendar as well as your data from emails, tasks or Google Keep.

The “problem”: Gemini Live requires the powerful language model Gemini 1.5 Pro, which runs in the cloud. When AI models extract details from your universe of personal Google Workspace data, transcriptions and so on, this happens only locally, with Gemini Nano. Between that local processing and the cloud-based Gemini 1.5 Pro, however, there is a huge data protection gap. We have asked Google for a statement on this and will update the article as soon as we receive a response.

Gemini and the Data Protection Gap

While Gemini, Latin for “twins”, actually stands for the partnership between Google's two AI labs DeepMind and Brain, the name could also be read as an unintentional description of the local-to-cloud divide.

In plain language: If you start chatting with Gemini Live in English in the Gemini app for Android (yes, of course the app is called that), the AI model running here has no access to your personal data from your email, calendar etc. And this is unlikely to change when Gemini Live becomes available in other languages and even for iOS in the coming weeks and months.

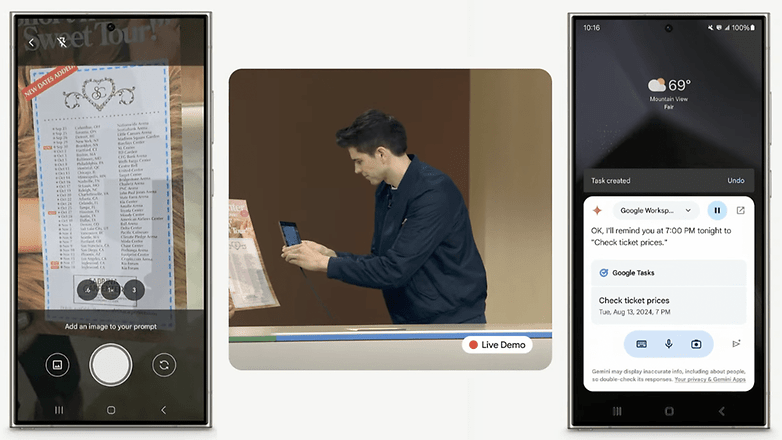

If you want to ask Gemini whether you can attend a concert based on a photograph of a poster, you have to type your query as if it were the Stone Age, or use voice input. The reason: although the locally running Gemini Nano model has access to your personal data, it lacks the power for real-time conversations.

Is Gemini Live the Moon Landing in the “AI Race”?

During the space race of the 1960s and 70s, NASA ran a program called “Gemini”, which paved the way for the subsequent Apollo program and the first moon landing in 1969. Coincidence? Hardly: the ten voices available for Gemini Live at launch were given English-language names of stars and constellations, such as Vega, Dipper and Ursa.

So while Google is reaching for the stars and even has an ex-NASA engineer on stage at its after-party, one piece is still missing from the moon landing: the carefully forged link between the most private user data, which only the locally running Gemini models can access, and the powerful cloud models that enable natural-sounding conversations.

Google has already announced the next step with Project Astra: Gemini Live is to be given access to the camera, as already shown at Google I/O, and will then gradually integrate apps such as Google Calendar.