Google I/O: Google Enters the (AI)rena with AI Colleagues and AI Agents

OpenAI tried to take the wind out of Google's AI sails on Monday evening with its new GPT-4o model. The latest ChatGPT version is supposed to not only process text but also hold seamless conversations, recognize emotions, and process input via live video. Google has struck back at its I/O developer conference with a futuristic AI broadside.

How strong is Google's AI model?

What ChatGPT is to OpenAI, Gemini is to Google. At least in terms of context size, Google's most powerful AI model, Gemini Pro 1.5, is well ahead of current GPT-4 models. According to Google, Gemini Pro 1.5 can process 1 million tokens, while the various GPT-4 models can process a maximum of 128,000 tokens. The number of tokens indicates how much information the respective model can hold in memory for the current task.

However, this says about as little about real-world performance as a sports car's horsepower says about its lap time on the Nürburgring. It also depends, for instance, on how effectively the information can be processed, i.e. how efficiently information is extracted from videos and made available for additional processing.

According to Google, 1 million tokens are enough to capture 1,500 PDF pages, 30,000 lines of code, or one hour of video. Google will soon make a Gemini model with 2 million tokens available to selected developers in various developer environments. Alphabet CEO Sundar Pichai has a clear goal: "Infinite Context", i.e. a total memory for AI models.
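To put these figures into perspective, here is a rough back-of-the-envelope sketch. It uses only the numbers quoted above and assumes, purely for illustration, that capacity scales linearly with the token count. Google gave no such formula, and the function and variable names are made up for this example.

```python
# Figures quoted in the article (attributed to Google).
GEMINI_TOKENS = 1_000_000
GPT4_TOKENS = 128_000

# What 1 million tokens can hold according to Google. These are alternatives:
# each one on its own fills the whole context.
CAPACITY_AT_1M = {
    "pdf_pages": 1_500,
    "lines_of_code": 30_000,
    "video_minutes": 60,
}


def tokens_per_unit():
    """Average number of tokens that one page, line, or video minute costs."""
    return {name: GEMINI_TOKENS / amount for name, amount in CAPACITY_AT_1M.items()}


def capacity_for(token_budget):
    """Estimate capacity for another context size (assumption: linear scaling)."""
    return {
        name: amount * token_budget / GEMINI_TOKENS
        for name, amount in CAPACITY_AT_1M.items()
    }


if __name__ == "__main__":
    print("Tokens per unit:", tokens_per_unit())
    print("128,000 tokens:", capacity_for(GPT4_TOKENS))
    print("2 million tokens:", capacity_for(2_000_000))
```

Under that assumption, a 128,000-token model would fit roughly 192 PDF pages or just under eight minutes of video, while the announced 2-million-token version would double Google's figures. Real-world numbers will vary with the content.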

Which Google products will receive new AI features?

All of the above is just theoretical. What will change in the various Google services, you ask? There were a few concrete announcements at Google I/O, some of which were even available immediately. Other announced products came with rather soft release dates such as "in the next few months", and it is often not clear whether the rollout of AI features will apply to the global market or only to the USA at first.

Google also has its paid "Gemini Advanced" tier. How does this work? Those who have opted for the AI premium package of the Google One service for $20 per month will receive some of the features earlier, or will be the only ones to receive them at all. Gemini Pro 1.5 is now offered in 35 languages, with the expansion to 2 million tokens arriving "sometime later this year".

In a nutshell: Google's AI portfolio is a jumbled patchwork, and I'm afraid that won't change any time soon. Let's dive into the fray!

Google Workspace: Welcome, AI colleagues

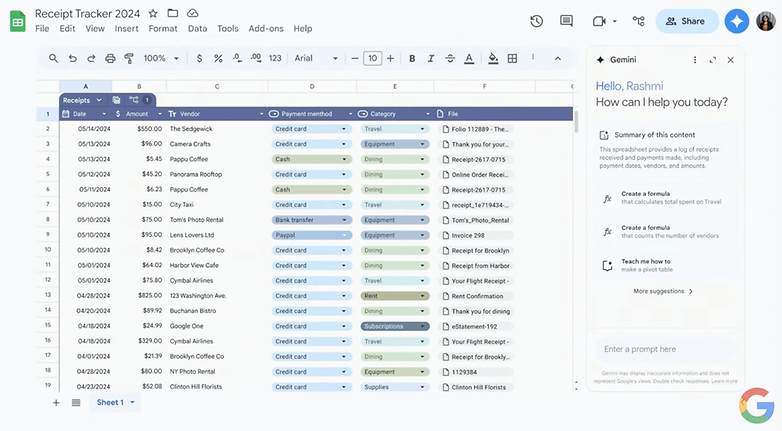

Google Workspace is a wide range of office applications from Google Sheets to Google Slides and, of course, everybody's favorite, Gmail. At last year's Google I/O, we were presented with Gemini Assistant, designed to write texts and create smart tables full of formulas or presentations. English-language Google accounts were already able to use the features with Gemini Advanced, and the so-called side panel will be "generally available" from next month. Does this mean other languages or non-paying users? We do not know.

It is cool that it will be possible to 'talk' with the Gmail inbox across the board in the future. If you have received various offers from a business contact, the artificial intelligence will search them for the different conditions and possible time periods, summarize them, and take you directly to the right place at the touch of a button so that you can either ask other relevant questions or accept or reject the offers directly. This special feature will be available to all users from "July".

Another exciting aspect of Google Workspace is how the AI features will work across different tools in the future. At the keynote, there was the example of a freelance photographer whose email inbox was overflowing with unread messages containing invoices. The side panel suggested a way to clear up this mess: create a Google Drive directory with this invoice and 37 others, plus a spreadsheet with an overview and classification of the invoices. The cool thing is that after approval was given, this process was not only executed once but also saved as an automated script for future invoices. However, such multi-platform automated scripts will not arrive with the side panel but only in September, and initially only for Labs users, i.e. beta testers. Notice a pattern here?

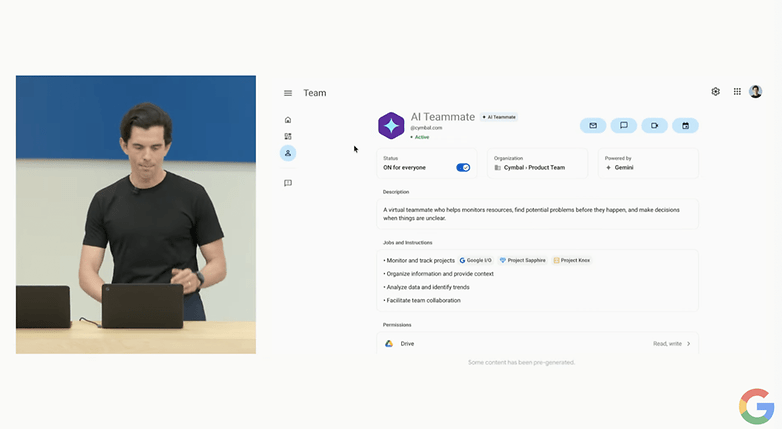

Things will get wild from 2025

Google also had a few announcements in store for next year. In the future, it will be possible to add AI team members to company accounts, including a job title, status, team unit, and, of course, a name. You can then add these AI employees to Google Chats, give them access to Drive directories, set them to CC in emails, and so on. From there, you can assign them tasks related to the information available to them. When asked "Are we 'on track' with our product launch?", the AI colleague will create a timeline and directly highlight potential problems. Google also promised third-party support, allowing companies to pre-configure certain types of AI colleagues.

Project Astra and personal AI agents

In the future, you will also have your own AI employees outside of Google Workspace that function as a type of personal assistant. These are so-called "agents". In this context, the term means that an AI model can devise a logical strategy and act according to a specific plan. For example, you can say, "I would like to return the shoes I bought online yesterday." An agent then searches your emails for the appropriate invoice, triggers a return shipment via the online store in question, and produces the return label.

Even more spectacular was the demo of Project Astra, which, according to Google, was filmed on a smartphone in real time and without any cuts. A camera was pointed around an office and the AI was asked, one task after another, to find objects that make noise, explain a component of a loudspeaker, come up with an alliteration for a collection of pens, explain the encryption method used in the code displayed on a computer screen, and recognize the London district where the office is located from the view out of the window. The highlight? After all that panning around, the AI was also able to answer the question of where a pair of glasses had been left.

Speaking of glasses, DeepMind founder Demis Hassabis, who was allowed to give this part of the presentation, exclaimed to the audience, "Imagine this with smart glasses!"

Google search gets an AI boost

From today, Google is rolling out a whole host of new functions for Google Search in the USA. These features should be available to more than one billion users by the end of 2024. At the heart of the new Google Search lie the so-called "AI Overviews", which are strongly reminiscent of the Perplexity app. Here, Google summarizes information about a topic on a dynamic screen and shows links to the respective sources.

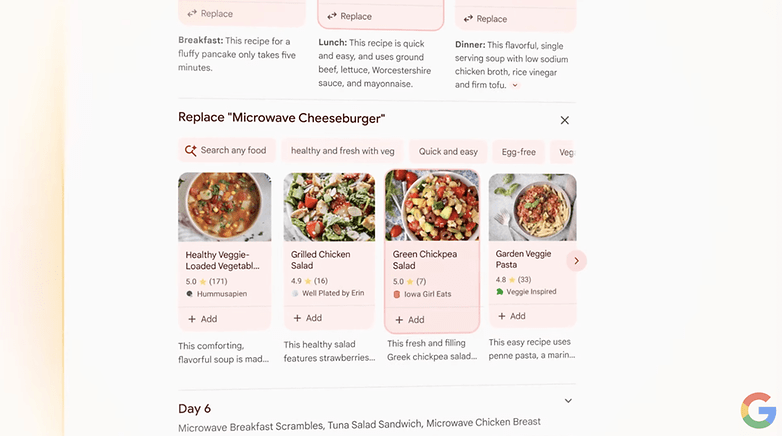

Anyone looking for a nutrition plan, for instance, is presented with a weekly plan and various recipes, accompanied by additional links. If a recipe is not suitable, it can be quickly replaced via a click. For more complex feedback, there is a text field to type prompts such as "Integrate more vegetarian options". It is important that Google Search always understands the context. In addition to recipes, these AI Overviews will initially also include restaurant tips, followed by parties, dates, training plans, travel, shopping, and more.

Another special feature of Google Search is that complex requests can be described directly. You no longer have to ask for just a nutrition plan but can also specify that the meals should be quick to prepare and require only a microwave.

Multimodal search with videos

In the future, it will be possible to search not only by text but also via video and voice. There was an impressive example of this on stage at the opening keynote. Rose Yao filmed a record player whose tone arm kept jumping back to the park position. In response to the question, "What's the problem here?", Google Search recognized both the record player model and the problem and was able to link directly to websites with suggested solutions. When will this multimodal search via video and voice roll out? It remains a mystery at press time.

Android 15 with lots of AI

Somewhere in the midst of 121 mentions of "AI", Google also spoke briefly about the upcoming Android 15. However, the focus here was not surprising at all. AI, what else? Users are already familiar with AI features such as Circle to Search, which will be available on 200 million Android devices by the end of 2024. Google also wants to replace the Google Assistant with Gemini and you will then be able to input and output text, voice, images, and videos directly. Google also promises a multimodal version of Gemini Nano. This Gemini version runs locally and is therefore particularly suitable for sensitive content. It will be available on Pixel smartphones later this year and is smart enough to recognize scam calls or explain the screen content to the visually impaired.

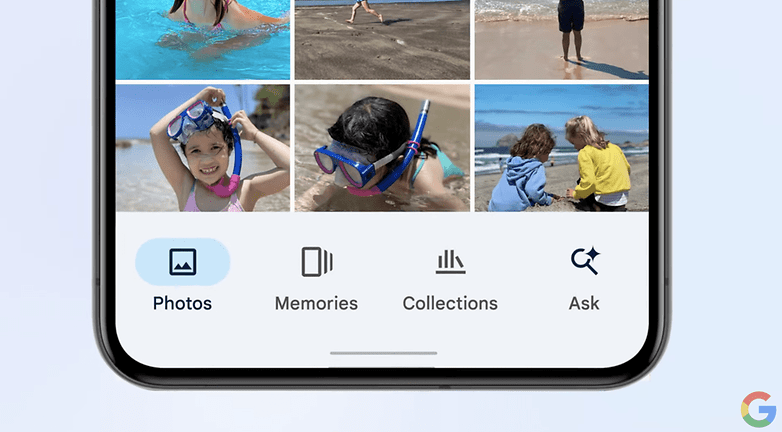

Google Photos is also set to receive new AI features. For instance, you will be able to use "Ask Photos" to talk to your photo collection at some point "from summer" and, for example, ask: "What was the license plate number of my car again?" The AI will then make the best guess of which car belongs to you and present you with a picture of the license plate. Another example from the presentation was the question, "When did my daughter learn to swim?". A series of pictures showing her swimming progress could then be generated directly by command.

A Gemini app is also arriving on Android which, just like the OpenAI model GPT-4o presented on Monday, will also allow natural conversations. Yes, it should also be possible to interrupt the AI that is currently speaking if the conversation is going in the wrong direction. Supposedly, adapting to the user's speech patterns should make the conversations particularly human. Here too, Google is putting this off until some time in the summer.

Will Android 15 Beta 2, which will be available from May 15, already contain any new AI features? We'll find out when the time comes.

AI creativity from video to music

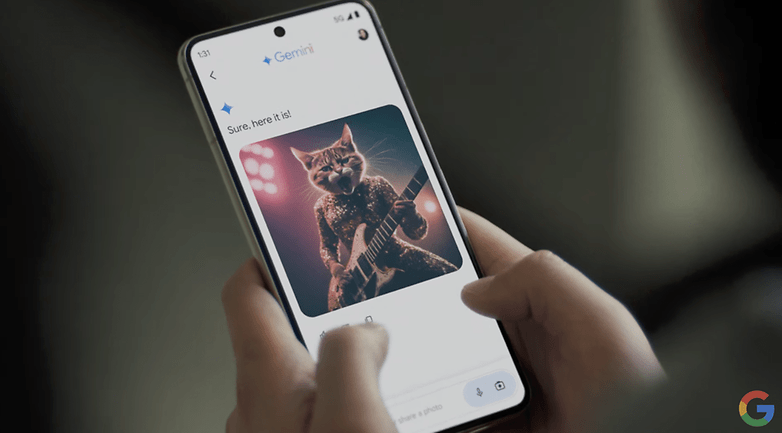

Of course, various creative tools with AI support were also included. After all, what would an AI-heavy keynote from an internet giant be without cat pictures?

- Music AI Sandbox was designed to provide artists with new creative tools. Wyclef Jean, for example, enthusiastically tried transferring music styles to existing tracks, and remix king Marc Rebillet was delighted with the AI colleague who filled empty loops and provided exciting suggestions.

- With Imagen 3, you will be able to generate even more realistic cat images in the future. Prompts should be understood even more accurately and the generation of text for logos, for instance, should also work reliably. Interested users can apply for the feature in ImageFX from today at labs.google.

- Of course, video generation was also a topic. The Veo AI model can be used to generate 1080p videos in a variety of cinematic styles from text, photos, and videos. Filmmaker Donald Glover is currently "shooting" a short film using this. How about the availability? Google also claimed it is arriving "soon".

- In the experimental application NotebookLM, it will be possible in the future to generate dialogs between two AIs based on stored information. At the keynote, there was a demo in which two AI voices discussed Newton's laws. Using the "Join" button, it is possible to join the conversation at any time and steer it in any direction, for example by having the somewhat dry physics discussion explain the basics of physics using a "basketball" example.

Google vs OpenAI: Who won the big AI week?

As the dust settles, who has the better AI, Google or OpenAI? Without trying out Gemini Pro 1.5 or the multimodal aspects of GPT-4o, it's hard to say. One thing is clear: OpenAI now has a slew of language models as well as a smartphone app and a desktop app.

What about Google? In addition to its AI models, Google operates, for instance, the two most visited websites in the world (Google and YouTube, with 275 billion monthly visits), the most used office suite with three billion installations, and the most popular mobile operating system in the world with a 71% market share. Unlike OpenAI, Google will have no major hurdles in delivering its models to where the use cases are.

We do wonder whether the rumors will prove true on June 10, when Google's major competitor is said to be announcing a partnership with OpenAI at its own developer conference. We'll find out in less than a month at Apple's WWDC, hopefully with fewer promises of "someday".